Beyond Simple Detection: Building Resilient Digital Systems

In our increasingly data-driven world, the integrity of information is paramount. From financial transactions to scientific research and communication networks, errors in data can have devastating consequences. Error detection only alerts us to a problem; error-correcting codes (ECC) go further by locating and automatically fixing corrupted bits, within the limits of the code. This article covers the fundamental principles, importance, and applications of ECC, along with its technical nuances, inherent tradeoffs, and practical implications for a wide range of industries and individuals.

The need for reliable data transmission and storage has never been more acute. Noise, interference, physical degradation of storage media, and even software glitches can introduce errors into the binary streams that underpin our digital lives. Without effective error correction, the reliability of systems would be severely compromised, leading to data loss, incorrect computations, and a breakdown in communication. ECC represents a sophisticated layer of redundancy, intelligently woven into data, to counteract these pervasive threats.

Why Error Correction Matters and Who Should Care

The significance of error-correcting codes cannot be overstated. They are the silent guardians of data, ensuring that the information we send and receive, or store and retrieve, remains accurate and trustworthy. The consequences of data corruption can range from minor annoyances to catastrophic failures:

- Financial Systems: Inaccurate transaction records can lead to severe financial losses and erosion of confidence.

- Healthcare: Errors in patient records, diagnostic images, or treatment plans can have life-threatening implications.

- Scientific Research: Corrupted experimental data can invalidate years of work and lead to erroneous scientific conclusions.

- Communication Networks: Garbled messages or dropped packets disrupt communication, impacting everything from voice calls to critical control systems.

- Data Storage: Bit rot on hard drives, flash memory, or archival tapes can render vital data inaccessible or corrupted over time.

- Aerospace and Automotive: Critical control systems in aircraft and vehicles rely on flawless data transmission for safety.

Essentially, anyone who relies on digital information, which means almost everyone, benefits from the invisible work of ECC. Engineers designing communication protocols, software developers building robust applications, system administrators managing data centers, and end-users of consumer electronics all benefit indirectly. The performance of your smartphone, the reliability of cloud storage, and the accuracy of online banking all depend on error correction.

Background and Context: The Evolution of Data Reliability

The concept of error control in communication systems dates back to the early days of telegraphy. However, the formalization and widespread application of error-correcting codes began in the mid-20th century with the advent of digital computing and telecommunications. Claude Shannon, often called the father of information theory, laid the theoretical groundwork in his seminal 1948 paper, “A Mathematical Theory of Communication.” Shannon proved the existence of codes that could achieve reliable communication over noisy channels, provided the transmission rate was below a certain capacity limit.

Early practical implementations often involved simpler error detection techniques, such as parity checks. A parity bit is added to a binary message to make the total number of ‘1’s either even or odd. A single parity bit detects any odd number of flipped bits, including every single-bit error, but it cannot tell which bit flipped, so it cannot correct anything, and it misses any even number of flips. As communication channels became more complex and data volumes exploded, the need for more sophisticated error correction became apparent.
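The behavior of a single parity bit can be sketched in a few lines. This is a minimal illustration using even parity; the function names `add_even_parity` and `parity_ok` are hypothetical and chosen only for this example.

```python
def add_even_parity(bits):
    """Append one bit so the total number of 1s is even."""
    return bits + [sum(bits) % 2]

def parity_ok(word):
    """Return True when the word still has an even number of 1s."""
    return sum(word) % 2 == 0

word = add_even_parity([1, 0, 1, 1])   # three 1s, so the parity bit is 1

one_flip = word.copy()
one_flip[2] ^= 1                        # single-bit error

two_flips = word.copy()
two_flips[0] ^= 1                       # two-bit error
two_flips[3] ^= 1

print(parity_ok(word), parity_ok(one_flip), parity_ok(two_flips))
# prints: True False True
```

The single flip is caught, but the double flip passes the check unnoticed, and in neither case does the check say which bit is wrong.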

The development of specific ECC algorithms has been a continuous process. Key milestones include:

- Hamming Codes (1950): Richard Hamming developed the first practical error-correcting code, capable of detecting and correcting single-bit errors.

- BCH Codes (1959-1960): Bose-Chaudhuri-Hocquenghem codes, discovered independently by Hocquenghem and by Bose and Ray-Chaudhuri, are a class of cyclic error-correcting codes that can correct multiple random errors.

- Reed-Solomon Codes (1960): Developed by Irving Reed and Gustave Solomon, these codes work on multi-bit symbols rather than individual bits, which makes them particularly effective at correcting burst errors (multiple consecutive errors) and invaluable in applications like CD/DVD players and digital broadcasting.

- LDPC Codes (Low-Density Parity-Check) (early 1960s, rediscovered in the 1990s): Robert Gallager introduced LDPC codes, which approach the theoretical limits of channel capacity but were too computationally intensive for the hardware of their time. Their rediscovery in the mid-1990s, together with practical iterative decoding algorithms, has made them a dominant force in modern communication standards like Wi-Fi and 5G.

- Turbo Codes (1993): Introduced by Berrou, Glavieux, and Thitimajshima, these codes achieved near-Shannon-limit performance and were widely adopted in 3G and 4G mobile communication standards.

The choice of ECC algorithm is heavily dependent on the characteristics of the communication channel or storage medium, the desired level of reliability, and the available computational resources.

How Error-Correcting Codes Work: The Principle of Redundancy

At its core, error correction involves adding carefully crafted redundant bits to the original data. These redundant bits are not mere copies; they are calculated based on specific mathematical relationships with the original data bits. When the data is received or read, these relationships are re-evaluated. If inconsistencies are found, the ECC mechanism can not only pinpoint the location of the error but also reconstruct the correct data bits.

There are two primary categories of ECC:

- Block Codes: These codes operate on fixed-size blocks of data. Each block of k information bits is transformed into a larger block of n bits (codeword), where n > k. The difference, n-k, represents the number of redundant bits. Examples include Hamming codes, BCH codes, and Reed-Solomon codes.

- Convolutional Codes: These codes operate on data streams of arbitrary length. Redundant bits are generated by a sliding window mechanism (a convolutional encoder) over the input bits. They are often used in continuous data streams like voice or video.
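To make the sliding-window idea concrete, here is a minimal sketch of a rate-1/2 convolutional encoder, which emits two output bits for every input bit. The generator taps (7 and 5 in octal) and the constraint length of 3 are a common textbook choice assumed here for illustration, and `conv_encode` is a hypothetical name. The sketch omits the tail bits that real systems usually append to flush the encoder.

```python
def conv_encode(bits, generators=(0b111, 0b101), constraint_length=3):
    """Rate-1/2 feedforward convolutional encoder (illustrative parameters)."""
    state = 0                                   # window of the most recent input bits
    mask = (1 << constraint_length) - 1
    out = []
    for b in bits:
        state = ((state << 1) | b) & mask       # slide the window by one bit
        for g in generators:
            # Each output bit is the parity of the window bits selected by g.
            out.append(bin(state & g).count("1") % 2)
    return out

print(conv_encode([1, 0, 1, 1]))
# prints: [1, 1, 1, 0, 0, 0, 0, 1]
```

Unlike a block code, the encoder never sees a block boundary: every output pair depends on the current bit and the two bits before it.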

The process typically involves:

- Encoding: The original data bits are fed into an encoder, which generates the additional parity or redundant bits based on the specific ECC algorithm. This augmented data (codeword) is then transmitted or stored.

- Decoding: The received or retrieved data is processed by a decoder. The decoder uses the redundant bits to check the integrity of the data. If errors are detected, the decoder attempts to correct them by inferring the most likely original data bits.
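The encode and decode steps can be illustrated with the classic Hamming(7,4) code, which protects 4 data bits with 3 parity bits. This is a sketch under assumptions: the bit layout (parity bits at positions 1, 2, and 4) is one common convention, and the function names are hypothetical.

```python
def hamming74_encode(d):
    """Encode 4 data bits as a 7-bit codeword laid out p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4      # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4      # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4      # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(r):
    """Correct at most one flipped bit; return (data bits, 1-based error position)."""
    r = list(r)
    s1 = r[0] ^ r[2] ^ r[4] ^ r[6]
    s2 = r[1] ^ r[2] ^ r[5] ^ r[6]
    s3 = r[3] ^ r[4] ^ r[5] ^ r[6]
    pos = s1 + 2 * s2 + 4 * s3     # syndrome; 0 means every check passed
    if pos:
        r[pos - 1] ^= 1            # flip the bit the syndrome points at
    return [r[2], r[4], r[5], r[6]], pos

codeword = hamming74_encode([1, 0, 1, 1])
received = codeword.copy()
received[4] ^= 1                   # corrupt position 5 in transit

print(hamming74_decode(received))
# prints: ([1, 0, 1, 1], 5)
```

The three re-evaluated parity checks form a syndrome that, read as a binary number, is the position of the flipped bit, which is how the decoder both locates and repairs the error.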

The ability to correct errors depends on the error-correcting capability of the code, which is governed by its minimum distance: the smallest number of positions in which any two valid codewords differ. Adding redundant bits makes room for a larger minimum distance, so more redundancy generally means better error correction but also higher overhead (more bits to store or transmit).
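The link between redundancy, overhead, and correction capability can be put into numbers. The sketch below uses the standard relations for a block code with codeword length n, data length k, and minimum distance d_min: it can detect up to d_min - 1 errors when used for detection only, or correct up to floor((d_min - 1) / 2) errors. The helper name `code_stats` is hypothetical.

```python
def code_stats(n, k, d_min):
    """Summarize a block code with n-unit codewords carrying k data units."""
    return {
        "rate": k / n,                    # fraction of each codeword that is data
        "overhead": (n - k) / k,          # redundancy relative to the data
        "detects": d_min - 1,             # guaranteed detection (detection-only use)
        "corrects": (d_min - 1) // 2,     # guaranteed correction
    }

examples = {
    "single parity (5,4)": (5, 4, 2),
    "Hamming (7,4)": (7, 4, 3),
    "Reed-Solomon (255,223)": (255, 223, 33),   # units are 8-bit symbols, not bits
}
for name, params in examples.items():
    print(name, code_stats(*params))
```

The single parity bit corrects nothing, Hamming(7,4) corrects one bit at 75% overhead, and the Reed-Solomon example corrects 16 symbol errors per codeword at roughly 14% overhead, which shows why longer, more sophisticated codes are attractive when the decoder can be afforded.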

Applications of Error-Correcting Codes in Action

ECC is ubiquitous, underpinning many of the digital technologies we rely on daily:

- Data Storage:

  - Hard Disk Drives (HDDs) & Solid State Drives (SSDs): ECC is crucial for detecting and correcting errors that occur on the magnetic platters or NAND flash memory cells. Without it, data corruption would be rampant.

  - Optical Media (CDs, DVDs, Blu-rays): Reed-Solomon codes are heavily used to combat scratches and defects on discs, allowing for reliable playback.

  - RAID (Redundant Array of Independent Disks): RAID levels such as 5 and 6 spread parity information across drives so that the contents of a failed drive can be reconstructed from the surviving ones.

- Telecommunications:

  - Wireless Communication (Wi-Fi, Cellular Networks): LDPC and Turbo codes are essential for reliable data transmission over noisy and fading wireless channels.

  - Satellite Communications: Robust ECC is needed to overcome the long distances and potential interference experienced in satellite links.

  - Ethernet and Network Infrastructure: High-speed Ethernet links use forward error correction, typically based on Reed-Solomon codes, to ensure data integrity.

- Data Transmission:

  - Digital Broadcasting (TV, Radio): Ensures viewers and listeners receive clear signals without glitches.

  - Deep Space Communication: NASA employs powerful ECCs like Reed-Solomon and convolutional codes for missions like the Voyager probes and Mars rovers, where received signals are extremely weak and the long round-trip delay makes retransmission impractical.

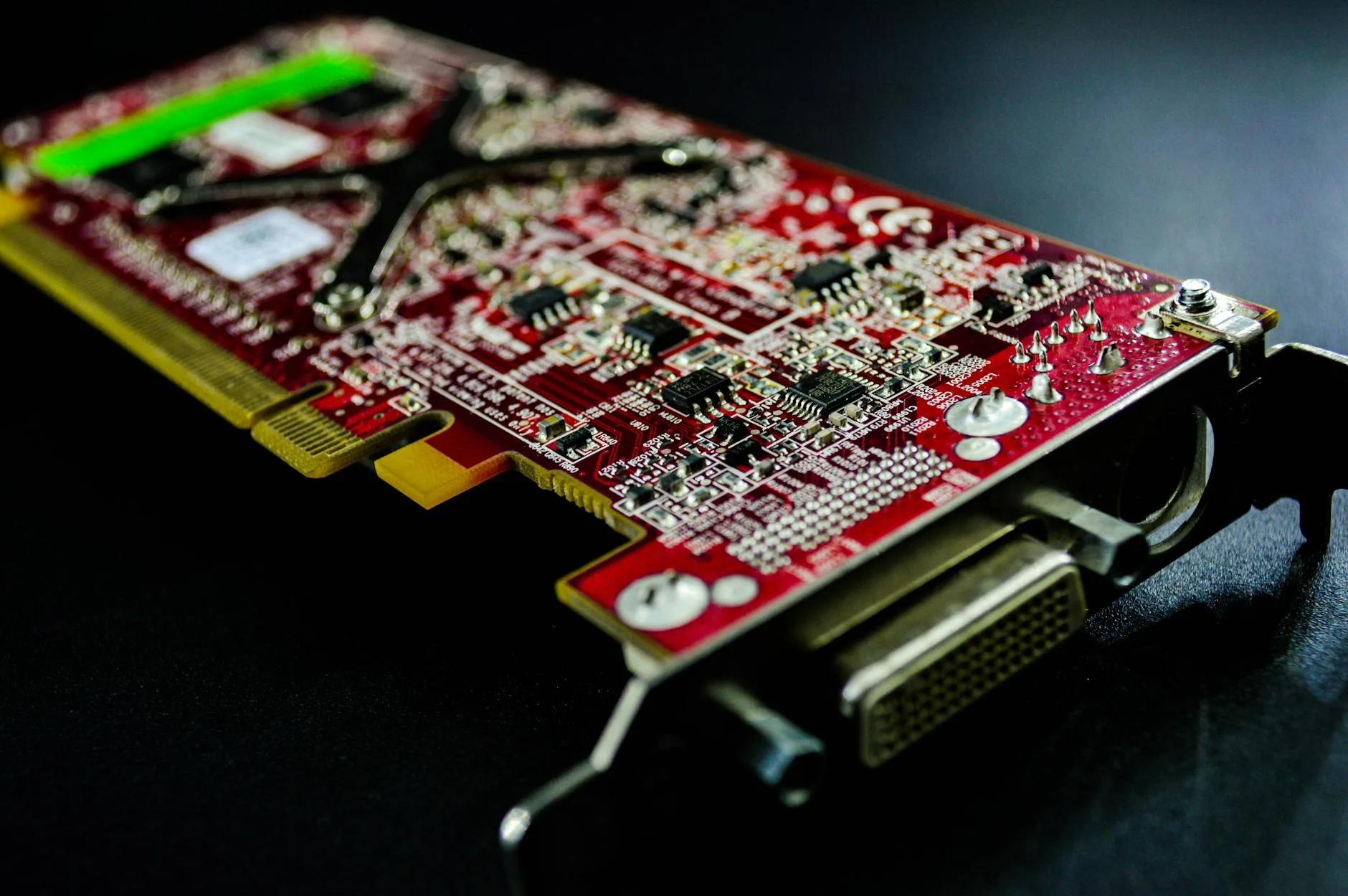

- Computing:

  - RAM Modules: ECC RAM, often found in servers and workstations, stores extra check bits alongside each memory word (commonly a Hamming-based SECDED code) so that single-bit errors are corrected and double-bit errors are detected, preventing crashes and silent data corruption.

  - Data Centers: Large-scale data storage and transmission within data centers heavily rely on ECC to maintain the integrity of vast datasets.

The widespread adoption of specific codes like LDPC and Reed-Solomon is a testament to their efficacy and adaptability across diverse environments.
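As one small illustration from the storage examples above, the parity-based reconstruction used by RAID-style arrays can be sketched with byte-wise XOR. This is a simplified single-parity scheme with hypothetical block contents, not the layout of any particular RAID implementation. It handles an erasure, meaning the failed drive is known, which is an easier problem than locating an unknown error.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

data = [b"ECC!", b"data", b"safe"]      # three hypothetical data blocks
parity = xor_blocks(data)               # stored on a fourth drive

# Simulate losing the second block and rebuild it from the survivors.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)
# prints: b'data'
```

Because XOR is its own inverse, combining the parity block with all surviving blocks leaves exactly the missing one.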

Tradeoffs and Limitations: The Cost of Correctness

While indispensable, ECC is not without its compromises:

- Overhead: The most significant tradeoff is the addition of redundant bits, which increases the storage space or transmission bandwidth required. For example, with a code that adds 30% redundancy, every 100 bits of original data become 130 bits stored or transmitted, a code rate of 100/130, or about 0.77.

- Computational Complexity: Encoding and decoding processes require processing power. While modern processors are highly capable, complex ECC algorithms can still introduce latency or consume significant computational resources, especially in real-time applications or high-throughput systems.

- Limited Correction Capability: No ECC can correct an unlimited number of errors. Each code guarantees detection and correction only up to a fixed number of errors per codeword. If the number of errors exceeds this limit, the decoder may report an uncorrectable error or, worse, miscorrect and silently output wrong data.

- Assumptions about Error Distribution: Many ECC algorithms are designed assuming random errors. If errors occur in bursts (multiple consecutive bits corrupted), different types of codes (like Reed-Solomon) are needed, and performance can still be impacted.

- Complexity of Implementation: Designing and implementing robust ECC systems can be technically challenging, requiring specialized expertise.
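The limited correction capability described above is easy to observe with a deliberately simple code. The sketch below uses a 3x repetition code with majority-vote decoding, chosen only because it is short; it carries 200% overhead and is not what production systems use. The function names are hypothetical.

```python
def rep3_encode(bits):
    """Repeat every bit three times."""
    return [b for bit in bits for b in (bit, bit, bit)]

def rep3_decode(coded):
    """Majority-vote each group of three received bits."""
    return [int(sum(coded[i:i + 3]) >= 2) for i in range(0, len(coded), 3)]

sent = rep3_encode([1, 0])              # [1, 1, 1, 0, 0, 0]

one_error = sent.copy()
one_error[0] ^= 1                       # within the code's limit

two_errors = sent.copy()
two_errors[0] ^= 1                      # two flips in the same group
two_errors[1] ^= 1

print(rep3_decode(one_error))           # prints: [1, 0]
print(rep3_decode(two_errors))          # prints: [0, 0]
```

With two flips in one group the decoder does not fail visibly; it confidently returns the wrong bit, which is why the expected error rate has to be matched to the code's capability.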

The choice of ECC is therefore a delicate balancing act between the desired level of reliability, acceptable overhead, available processing power, and the expected nature of errors.

Practical Advice and Cautions: Implementing and Choosing ECC

For those involved in designing or managing systems where data integrity is crucial, consider the following:

- Understand Your Environment: What are the expected error rates and patterns? Are errors likely to be random or bursty? This dictates the type of ECC that will be most effective.

- Quantify Reliability Needs: What is the acceptable probability of data corruption? This will determine the level of redundancy required.

- Assess Computational Resources: Can your system handle the processing demands of complex encoding and decoding algorithms?

- Consider Existing Standards: For common applications like networking or storage, leverage well-established ECC algorithms and standards that have been proven in the field.

- Test Thoroughly: Before deploying any ECC solution, conduct rigorous testing under realistic conditions to validate its performance.

- Beware of Over-Correction: While strong ECC is good, excessively high redundancy can lead to diminishing returns in terms of reliability while significantly increasing overhead.

- Hardware vs. Software ECC: ECC can be implemented in hardware (e.g., ECC RAM, ASICs) for maximum speed, or in software for flexibility. The choice depends on performance requirements and system architecture.
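When quantifying reliability needs, a rough first estimate can come from a simple model. The sketch below assumes independent bit errors with probability p and a decoder that corrects up to t errors per n-bit block and fails otherwise; real channels and decoders often deviate from both assumptions, so treat the result as a starting point for testing, not a guarantee. The function name is hypothetical.

```python
from math import comb

def block_failure_probability(n, t, p):
    """Probability that more than t of n bits flip, for independent bit error rate p."""
    p_ok = sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(t + 1))
    return 1 - p_ok

# Hamming(7,4) corrects t = 1 error per 7-bit block.
for p in (1e-2, 1e-3, 1e-4):
    print(p, block_failure_probability(7, 1, p))
```

At a raw bit error rate of 1e-3, for instance, the per-block failure probability comes out on the order of 2e-5, and it falls roughly with the square of p because two errors are needed to defeat a single-error-correcting code.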

For end-users, particularly in enterprise environments, understanding whether your storage, memory, or network infrastructure supports ECC can be a factor in assessing the overall reliability and robustness of your systems. Always consult manufacturer specifications and consider ECC features when purchasing critical hardware.

Key Takeaways of Error-Correcting Codes

- ECC is foundational for digital reliability: It goes beyond detection to actively fix errors in data.

- Redundancy is the core principle: Carefully calculated extra bits enable error correction.

- Ubiquitous in modern tech: Found in storage, communication, and computing systems.

- Tradeoffs are inevitable: Balancing reliability with overhead (storage/bandwidth) and computational cost is crucial.

- Algorithm choice matters: Different codes (Hamming, Reed-Solomon, LDPC, Turbo) suit different error profiles and applications.

- Continuous evolution: Research constantly pushes the boundaries of efficiency and performance.

References

- Shannon, C. E. (1948). A Mathematical Theory of Communication. Bell System Technical Journal, 27(3), 379-423; 27(4), 623-656.

The foundational paper in information theory, proving the theoretical possibility of reliable communication over noisy channels.

- Hamming, R. W. (1950). Error detecting and error correcting codes. Bell System Technical Journal, 29(2), 147-160.

Introduced the concept of single-bit error correction with the widely cited Hamming code.

- Lin, S., & Costello Jr, D. J. (2004). Error Control Coding (2nd ed.). Prentice Hall.

A comprehensive textbook offering in-depth coverage of various error-correcting code families and their principles.

- Gallager, R. G. (1962). Low-density parity-check codes. IRE Transactions on Information Theory, 8(1), 21-28.

Introduced LDPC codes, which later gained prominence due to their near-optimal performance.