Beyond Bits: The Dawn of Quantum Computing and the Revolution it Promises

Unlocking the Universe’s Secrets, One Qubit at a Time

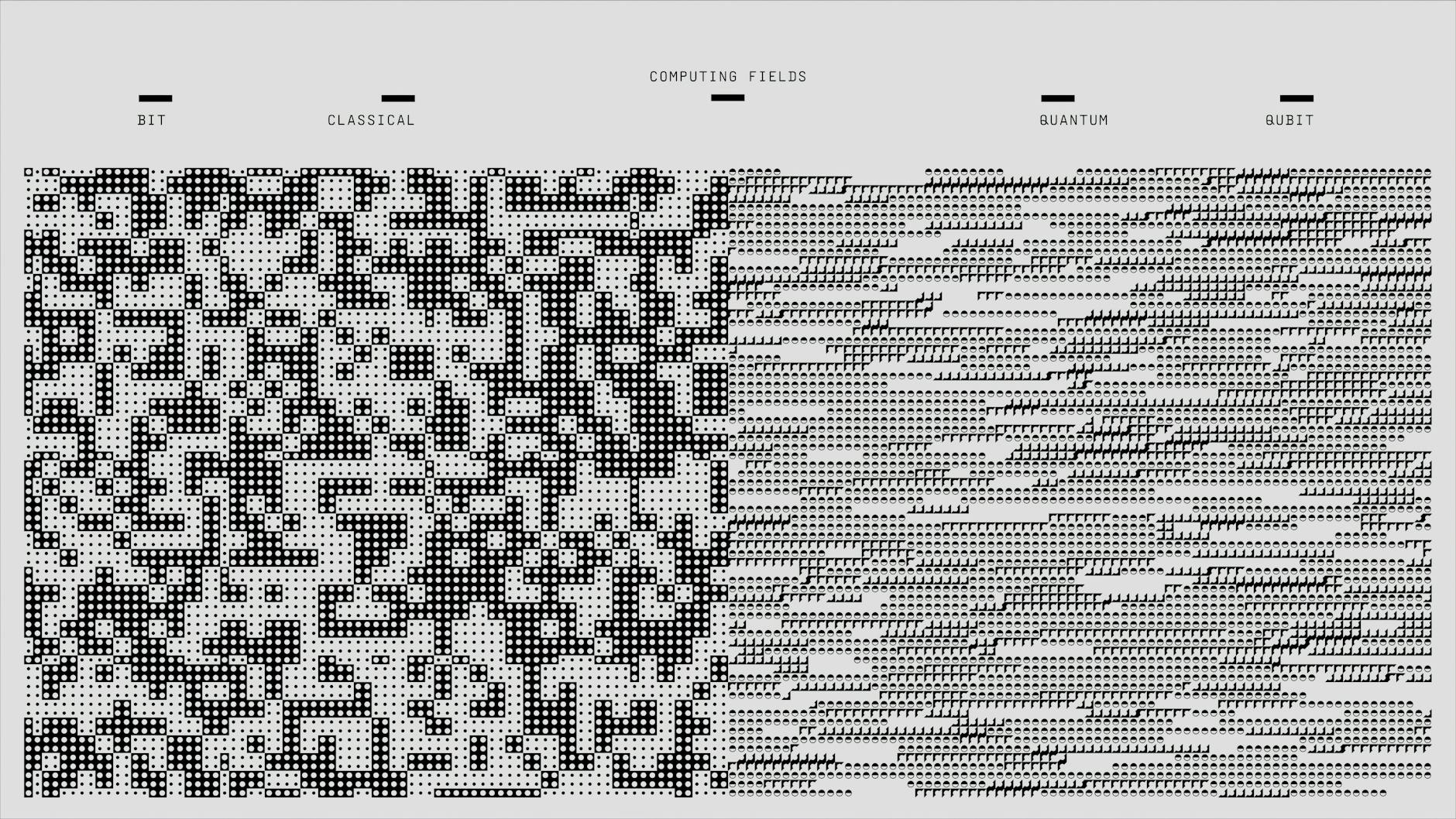

For decades, our digital world has been built on the foundation of classical computing. From the smartphones in our pockets to the supercomputers powering scientific research, these machines operate on a fundamental principle: bits. These bits, each representing either a 0 or a 1, are the building blocks of all information processing we know. But what if there were a fundamentally different way to compute, one that could tackle problems currently insurmountable for even the most powerful classical computers? Enter quantum computing, a nascent field that leverages the bizarre and counter-intuitive laws of quantum mechanics to perform calculations in ways that were once the realm of science fiction.

The promise of quantum computing is immense, hinting at breakthroughs in fields ranging from drug discovery and materials science to financial modeling and artificial intelligence. It’s a technology that’s still in its early stages, often described as being in a similar developmental phase to classical computing in the 1950s. Yet, the pace of innovation is accelerating, with major tech companies, governments, and academic institutions pouring billions into research and development. This guide aims to demystify quantum computing, exploring its core concepts, its potential applications, its current limitations, and what the future might hold.

Context & Background: From Classical Limits to Quantum Possibilities

To understand quantum computing, it’s essential to first appreciate the limitations of its classical predecessor. Classical computers, at their core, manipulate information through transistors acting as switches. These switches are definitively either on (1) or off (0). While incredibly sophisticated in their sequencing and parallel processing, they are fundamentally bound by this binary logic. For certain types of problems, particularly those involving an exponentially increasing number of possibilities, classical computers quickly reach their computational limits.

Consider, for example, the problem of simulating the behavior of molecules. The interactions between atoms and electrons are governed by the laws of quantum mechanics, which are inherently probabilistic and complex. Simulating even a moderately sized molecule requires a number of classical bits that grows exponentially with the size of the molecule. This is why drug discovery, a field heavily reliant on understanding molecular interactions, has historically been a slow and painstaking process, often relying on trial and error.

The theoretical underpinnings of quantum computing were laid out in the early 1980s. Physicists like Richard Feynman suggested that to truly simulate quantum systems, we needed a computer that itself operated on quantum principles. Later, in the 1990s, mathematicians like Peter Shor developed algorithms that demonstrated the potential power of quantum computation. Shor’s algorithm, for instance, showed that a quantum computer could factor large numbers in polynomial time, far faster than any known classical algorithm, whose running time grows super-polynomially with the size of the number. This was a pivotal moment, because the difficulty of factoring large numbers underpins widely used public-key cryptosystems such as RSA, and with them much of modern online security. A sufficiently powerful quantum computer could, in theory, break these encryption methods.
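To make the link between factoring and Shor’s algorithm a little more concrete, here is a minimal sketch of the classical "wrapper" around it. The function names are illustrative, and the order-finding step is done by brute force here; that step is the one a quantum computer would replace with fast period finding, so this code shows the structure of the reduction, not any quantum speedup.

```python
import math

def multiplicative_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force: this is the step a quantum computer would speed up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(n, a):
    """Try to split n using the order of a modulo n; return None if this a fails."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g          # a already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None               # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # a**(r/2) is -1 mod n: pick another a
    p = math.gcd(half - 1, n)     # guaranteed to be a nontrivial factor here
    return p, n // p

print(factor_from_order(15, 7))   # (3, 5)
```

For a toy number like 15 the brute-force loop is instant; for the numbers used in real cryptography it would be hopeless, which is exactly the gap a quantum period-finding routine is meant to close.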

This realization spurred a significant increase in research and investment. Early quantum computers were rudimentary, often consisting of just a few qubits, and highly susceptible to errors. However, through persistent effort and innovation, the field has progressed to the point where researchers are building increasingly robust and complex quantum processors. The journey from theoretical curiosity to tangible technology has been long, but the potential rewards have kept the momentum going.

In-Depth Analysis: The Building Blocks of Quantum Computation

The heart of quantum computing lies in its departure from classical bits. Instead, quantum computers use qubits (quantum bits). Unlike a classical bit, which must be either 0 or 1, a qubit can exist in a state of superposition: a weighted combination of 0 and 1 that resolves to one value or the other only when it is measured. Imagine a spinning coin before it lands; it’s neither heads nor tails, but in a probabilistic state of both. Mathematically, a qubit is a unit vector in a two-dimensional complex vector space, written α|0⟩ + β|1⟩, where the basis states |0⟩ and |1⟩ correspond to the classical 0 and 1 and |α|² + |β|² = 1. Measuring the qubit yields 0 with probability |α|² and 1 with probability |β|².
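The vector picture of a qubit is easy to play with in code. The sketch below stores a single qubit as a pair of complex amplitudes and computes the probability of measuring 0 or 1; the names `measurement_probabilities`, `ket0`, `ket1`, and `plus` are just illustrative choices.

```python
import math

def measurement_probabilities(state):
    """Return (P(0), P(1)) for a normalized single-qubit state (alpha, beta)."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

ket0 = (1 + 0j, 0 + 0j)                      # always measures as 0
ket1 = (0 + 0j, 1 + 0j)                      # always measures as 1
plus = (1 / math.sqrt(2), 1 / math.sqrt(2))  # equal superposition of 0 and 1

for name, state in [("|0>", ket0), ("|1>", ket1), ("|+>", plus)]:
    p0, p1 = measurement_probabilities(state)
    print(f"{name}: P(0)={p0:.2f}, P(1)={p1:.2f}")
```

The equal superposition gives a 50/50 split, which is the "spinning coin" of the analogy expressed as numbers.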

The power of superposition comes into play when we consider multiple qubits. Two classical bits can be in any one of four states (00, 01, 10, 11), but only one at a time. Two qubits, thanks to superposition, can exist in a combination of all four of these states at once. As the number of qubits increases, the number of states involved grows exponentially: describing a register of n qubits takes 2^n amplitudes, one for each classical bit string. A measurement still returns only one of those bit strings, so quantum algorithms must be designed so that the amplitudes of wrong answers cancel and those of right answers reinforce. This exponential scaling, harnessed through such interference, is what gives quantum computers their potential to solve problems that are intractable for classical machines.
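A quick back-of-the-envelope calculation shows how fast this exponential growth bites when a classical machine tries to keep track of a quantum state. The sketch below assumes each amplitude is stored as one complex number made of two 64-bit floats (16 bytes); that storage format is an assumption for illustration, not a property of any particular simulator.

```python
def amplitudes_needed(n_qubits):
    """Number of complex amplitudes in the state of n qubits."""
    return 2 ** n_qubits

def classical_memory_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to store the full state classically (16 bytes/amplitude is assumed)."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude

for n in (2, 10, 30, 50):
    gib = classical_memory_bytes(n) / 2 ** 30
    print(f"{n:>2} qubits: {amplitudes_needed(n):>20,} amplitudes, {gib:,.6g} GiB")
```

Under this assumption, 30 qubits already need 16 GiB, and every additional qubit doubles the requirement.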

Another crucial quantum phenomenon is entanglement. When two or more qubits become entangled, they are intrinsically linked, regardless of the distance separating them: the state of each qubit can no longer be described independently of the others. Measuring one entangled qubit immediately tells us about the outcome we will find for the other(s), although this correlation cannot be used to send information faster than light. Einstein famously referred to this as “spooky action at a distance.” In quantum computing, entanglement allows qubits to work in concert, and it is an essential resource for quantum algorithms. For example, if two qubits are prepared in a maximally entangled state and we measure one to be in the |0⟩ state, we instantly know the state of the other qubit, even if it’s miles away.
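The correlation in that example can be illustrated by sampling measurement outcomes from a standard entangled two-qubit state, (|00⟩ + |11⟩)/√2. This is only a classical sketch of the measurement statistics in one fixed basis, with illustrative names; it does not capture everything that makes entanglement non-classical.

```python
import math
import random

# Amplitudes for the outcomes 00, 01, 10, 11 of the state (|00> + |11>) / sqrt(2).
BELL = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]

def sample_measurement(state, rng):
    """Measure both qubits once; return the outcome as a 2-character bit string."""
    probabilities = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probabilities)[0]
    return format(index, "02b")

rng = random.Random(0)  # fixed seed so the run is repeatable
outcomes = [sample_measurement(BELL, rng) for _ in range(1000)]
print({o: outcomes.count(o) for o in sorted(set(outcomes))})
```

Each individual result is random, but the two bits always agree: only 00 and 11 ever appear, never 01 or 10.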

Quantum computation is performed by manipulating these qubits using quantum gates, which are the quantum analogues of classical logic gates. While classical gates perform operations like AND, OR, and NOT, quantum gates perform operations like the Hadamard gate (which creates superposition) and CNOT gates (which can create entanglement). A sequence of these quantum gates applied to qubits constitutes a quantum algorithm. The computation progresses through these manipulations, and at the end, a measurement is performed to collapse the superposition into a definitive classical outcome.
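As a small illustration of gates in action, the sketch below applies a Hadamard gate and then a CNOT gate to two qubits that start in |00⟩, using plain matrix-vector multiplication. The basis order 00, 01, 10, 11 (first qubit on the left) and the helper name `apply_gate` are conventions chosen for this example.

```python
import math

def apply_gate(matrix, state):
    """Multiply a gate matrix by a state vector."""
    return [sum(m * a for m, a in zip(row, state)) for row in matrix]

s = 1 / math.sqrt(2)

# Hadamard on the first qubit, identity on the second.
H_ON_FIRST = [
    [s, 0, s, 0],
    [0, s, 0, s],
    [s, 0, -s, 0],
    [0, s, 0, -s],
]

# CNOT: flips the second qubit when the first qubit is 1.
CNOT = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

state = [1, 0, 0, 0]                   # |00>
state = apply_gate(H_ON_FIRST, state)  # superposition of |00> and |10>
state = apply_gate(CNOT, state)        # entangled: |00> and |11>

probabilities = [abs(a) ** 2 for a in state]
for label, p in zip(["00", "01", "10", "11"], probabilities):
    print(f"P({label}) = {p:.2f}")
```

The Hadamard gate creates the superposition and the CNOT gate turns it into entanglement, leaving equal probability on 00 and 11 and none on 01 or 10.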

However, the very quantum properties that give quantum computers their power also make them incredibly delicate and prone to errors. Decoherence is a major challenge. Qubits are sensitive to environmental noise – vibrations, temperature fluctuations, electromagnetic fields – which can disrupt their quantum states and cause them to lose their superposition or entanglement. Maintaining the coherence of qubits for long enough to perform complex computations is a significant engineering hurdle.

There are several leading approaches to building qubits, each with its own set of advantages and disadvantages:

- Superconducting Qubits: These are tiny superconducting circuits cooled to near absolute zero. They are relatively easy to fabricate using existing semiconductor manufacturing techniques and have shown promise for scalability. Companies like Google and IBM are heavily invested in this technology.

- Trapped Ions: Individual atoms are held in place using electromagnetic fields, and their electronic states serve as qubits. These systems tend to have very long coherence times and high fidelity operations, but scaling them up can be challenging. IonQ is a prominent player in this space.

- Photonic Qubits: Information is encoded in photons (particles of light). These are attractive because photons are robust and can travel long distances, but creating entanglement and performing complex gates can be difficult. PsiQuantum is a notable company pursuing this approach.

- Topological Qubits: This more theoretical approach aims to encode quantum information in the topological properties of matter, making them inherently more resistant to noise. Microsoft is a key proponent of this method.

The number of qubits in a quantum computer is a significant metric, but equally important is the quality of those qubits and the ability to perform operations with high fidelity. A system with a few high-quality, well-connected qubits is often more powerful than a larger system with many noisy qubits.
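One rough way to see why quality matters as much as quantity is to estimate how errors pile up over a circuit. The sketch below assumes every gate succeeds independently with probability equal to its fidelity, so a circuit of G gates runs cleanly with probability of about fidelity^G. That is a deliberately simplified model, and the fidelity values are illustrative rather than measurements of any real device.

```python
def circuit_success_estimate(gate_fidelity, gate_count):
    """Crude estimate: probability that every gate in the circuit succeeds."""
    return gate_fidelity ** gate_count

for fidelity in (0.99, 0.999, 0.9999):
    estimate = circuit_success_estimate(fidelity, 1000)
    print(f"fidelity {fidelity}: ~{estimate:.4f} chance of an error-free 1000-gate run")
```

In this model, going from 99% to 99.9% gate fidelity lifts a 1,000-gate circuit from almost certain failure to roughly a one-in-three chance of a clean run, a gain that adding more noisy qubits would not provide.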

Pros and Cons: Navigating the Quantum Landscape

The potential benefits of quantum computing are transformative, but the challenges are equally significant. Understanding both sides is crucial for a balanced perspective.

Pros:

- Solving Intractable Problems: Sufficiently large, error-corrected quantum computers could tackle certain problems that are out of reach for even the most powerful supercomputers. These include complex simulations of molecules, some optimization problems, and breaking certain types of modern encryption.

- Accelerated Discovery and Innovation:

  - Drug Discovery and Development: Simulating molecular interactions with unprecedented accuracy can lead to the design of new drugs and therapies much faster.

  - Materials Science: Designing new materials with specific properties, such as superconductors or more efficient catalysts, by understanding their atomic and electronic behavior.

  - Financial Modeling: Optimizing portfolios, detecting fraud, and managing risk with greater precision.

  - Artificial Intelligence: Enhancing machine learning algorithms, particularly in areas like pattern recognition and optimization, potentially leading to more powerful AI.

- Cryptography: While quantum computers pose a threat to current encryption (e.g., RSA), that threat is also driving the development of new, quantum-resistant cryptographic methods.

- Logistics and Optimization: Solving complex optimization problems, such as supply chain management or traffic flow, could lead to significant efficiencies.

Cons:

- High Cost and Complexity: Building and maintaining quantum computers requires highly specialized equipment, extreme environmental controls (like near-absolute zero temperatures), and a deep understanding of quantum physics.

- Error Rates and Decoherence: Qubits are extremely sensitive to environmental noise, leading to errors. Correcting these errors (quantum error correction) is a major ongoing research area and requires a significant overhead in terms of qubits.

- Limited Scalability: While the number of qubits is increasing, building systems with a large number of stable, interconnected, and controllable qubits remains a formidable engineering challenge.

- Algorithm Development: Developing efficient quantum algorithms is a complex and specialized field. Not all problems can be sped up by quantum computers, and identifying which problems will benefit most is an active area of research.

- Lack of Standardization: The field is still evolving, with different hardware approaches and programming frameworks, leading to a lack of standardization.

- “Noisy Intermediate-Scale Quantum” (NISQ) Era: We are currently in an era where quantum computers have a limited number of qubits and are not yet fault-tolerant, meaning they are prone to errors and can only handle specific, sometimes niche, problems.
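The error-correction overhead mentioned in the list above can be illustrated with the simplest classical analogue: a three-bit repetition code with majority voting. This is only a sketch with illustrative function names. Real quantum error correction cannot simply copy qubits and has to handle more kinds of error than bit flips, but the trade-off is the same: several physical units are spent to protect one logical unit.

```python
import random

def encode(bit):
    """Protect one logical bit by storing three copies."""
    return [bit, bit, bit]

def noisy_channel(bits, flip_probability, rng):
    """Flip each bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]

def decode(bits):
    """Majority vote: corrects any single flipped bit."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(flip_probability, trials, rng):
    """Fraction of trials in which the decoded bit is wrong."""
    errors = sum(
        decode(noisy_channel(encode(0), flip_probability, rng)) != 0
        for _ in range(trials)
    )
    return errors / trials

def analytic_logical_error_rate(p):
    """Probability that two or three of the three bits flip."""
    return 3 * p ** 2 - 2 * p ** 3

rng = random.Random(1)  # fixed seed so the run is repeatable
rate = logical_error_rate(0.05, 20000, rng)
print(f"physical error rate: 0.05, simulated logical error rate: {rate:.4f}")
print(f"analytic logical error rate: {analytic_logical_error_rate(0.05):.5f}")
```

With a 5% chance of each bit flipping, the encoded bit fails well under 1% of the time, at the cost of three times as many bits.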

Key Takeaways

- Quantum computers use qubits, which can exist in superposition (a combination of 0 and 1) and be entangled with other qubits. For certain problems, this allows exponential speedups over the best known classical algorithms.

- These capabilities promise to solve complex problems currently intractable for classical machines, particularly in areas like drug discovery, materials science, and optimization.

- Key quantum phenomena like superposition and entanglement are the source of their power but also lead to fragility due to decoherence (sensitivity to environmental noise) and high error rates.

- Current quantum computers are in the “Noisy Intermediate-Scale Quantum” (NISQ) era, meaning they have a limited number of qubits and are not yet fully error-corrected.

- Major technological challenges include building stable qubits, increasing qubit count, improving qubit quality (fidelity), and developing effective error correction techniques.

- Promising hardware approaches include superconducting qubits, trapped ions, photonic qubits, and topological qubits.

- While quantum computers threaten current encryption methods, they are also driving the development of new quantum-resistant cryptography.

Future Outlook: The Road Ahead

The field of quantum computing is progressing at a remarkable pace, but it’s important to manage expectations. We are not likely to see quantum computers replacing our laptops or smartphones anytime soon. Instead, their impact will initially be felt in specialized scientific and industrial applications. Experts anticipate a gradual transition, where access to quantum computing will be primarily through cloud-based platforms, much like high-performance computing is accessed today.

The near-term future (the next 5-10 years) will likely see the development of more powerful NISQ devices capable of demonstrating “quantum advantage” for specific, well-defined problems. This means showing a demonstrable benefit over the best classical algorithms for a particular task. Researchers are focused on improving qubit stability, increasing the number of qubits, and refining quantum error correction techniques. The goal is to eventually achieve fault-tolerant quantum computing, where errors can be managed to the point where complex, long-running algorithms can be executed reliably.

In the longer term, fault-tolerant quantum computers could revolutionize entire industries. Imagine designing personalized medicines by precisely simulating how a drug interacts with a patient’s unique biology, or creating novel materials with properties never before seen, from advanced batteries to self-healing concrete. The potential for scientific discovery is staggering. In fields like artificial intelligence, quantum computers could accelerate the training of machine learning models and unlock new forms of AI that are currently unimaginable.

However, the timeline for achieving these advanced capabilities is still uncertain. Many breakthroughs are still needed in hardware engineering, quantum algorithm development, and software infrastructure. The race is on, with nations and corporations investing heavily, recognizing the strategic and economic importance of this technology.

Call to Action

The world of quantum computing is no longer confined to academic laboratories; it’s rapidly becoming a reality with profound implications for society. As a reader, staying informed about these developments is crucial. Consider exploring introductory resources, following reputable science news outlets, and perhaps even engaging with online courses or communities focused on quantum information science.

For businesses and researchers, now is the time to explore how quantum computing might impact your field. While widespread adoption is still some way off, understanding the potential applications and starting to experiment with quantum simulators or cloud-based quantum hardware can provide a significant strategic advantage. The revolution is underway, and those who embrace learning and adaptation will be best positioned to harness its transformative power.
