A Deeper Dive into Why AI Sometimes Gets It Wrong – and What It Means for Us

The allure of artificial intelligence is undeniable, promising a future of enhanced productivity and groundbreaking discoveries. Yet a persistent challenge shadows its progress: AI “hallucinations,” in which models confidently generate plausible but factually incorrect information. Some recent commentary offers a simple explanation, namely that hallucinations merely reflect the data models are trained on. A closer look reveals a more complex interplay of factors, and with it a richer perspective on both AI’s limitations and its potential for genuine usefulness.

The Allure and Pitfall of Training Data

It’s a foundational truth that AI models learn from the vast datasets they are trained on. These datasets, often scraped from the internet, reflect a broad sweep of human knowledge and creativity, along with its misinformation and biases. When a model “hallucinates,” it is not necessarily fabricating from thin air. More often it is extrapolating patterns, making confident predictions from incomplete or conflicting information in its training data.

Think of it like a student who has memorized countless facts but has not yet developed the critical thinking skills to weigh contradictory sources or to synthesize information in a genuinely novel way. In essence, the model generates text that is statistically probable given its training, not text that has been checked against verifiable reality. That is what distinguishes a hallucination from deliberate invention: the model responds to a prompt by assembling a coherent answer that *appears* correct, drawing on the statistical relationships it has learned.
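To make “statistically probable” concrete, here is a deliberately tiny sketch: a bigram model that only counts which word follows which in a hypothetical three-sentence corpus, then greedily appends the most frequent next word. Real language models are vastly larger and condition on far more context, so treat this as an illustration of the principle rather than of how production models work. Because this toy model looks only at the last word, and “is” is followed by “sydney” twice and by “canberra” once in its corpus, it completes a prompt about Australia’s capital with the wrong city, fluently and with no notion of whether the answer is true.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-counts for one word into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}


def complete(counts, prompt, max_words=1):
    """Greedily append the most frequent next word, looking only at the last word."""
    words = prompt.split()
    for _ in range(max_words):
        options = counts.get(words[-1])
        if not options:
            break  # the model has never seen anything follow this word
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus, not real training data.
corpus = [
    "the capital of australia is canberra",
    "the largest city of australia is sydney",
    "the largest city is sydney",
]
model = train_bigrams(corpus)
print(next_word_probs(model, "is"))  # 'sydney' gets 2/3 of the probability mass
print(complete(model, "the capital of australia is"))
```

The output is grammatical and confident, yet wrong, because the model reproduces frequency patterns in its data rather than checking facts.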

Why Training Data Isn’t the Whole Story

While the training data is undoubtedly the bedrock of AI behavior, attributing hallucinations solely to it oversimplifies the issue. Several other critical factors contribute to the unpredictable nature of AI responses:

* **Model Architecture and Parameters:** A neural network’s design (the number of layers and parameters, and how its components are connected) and the algorithms used to train it both influence how information is processed and generated. Different architectures may be more prone to certain types of errors or biases.

* **Inference and Generation Processes:** Even with identical training data, how a model is prompted and how it produces output at inference time (for example, its decoding strategy and sampling temperature) can significantly affect the factual accuracy of a response. A higher-temperature, more “exploratory” setting tends to produce more varied, but potentially less accurate, output.

* **The Nature of Language Itself:** Human language is inherently ambiguous and context-dependent. AI models, while adept at pattern recognition, still struggle with the full spectrum of human understanding, including sarcasm, subtle irony, and the nuanced implications of context. This can lead to misinterpretations and subsequent inaccuracies.

* **Lack of True Understanding:** Crucially, current AI models do not possess consciousness or a genuine understanding of the world. They are sophisticated pattern-matching machines. When they produce incorrect information, it’s a failure of pattern recognition or statistical prediction, not a deliberate act of deception or a sign of sentience.
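The effect of the temperature setting mentioned in the list above can be sketched in a few lines. The example assumes hypothetical raw scores (logits) for three candidate next tokens and applies the standard temperature-scaled softmax, p_i = exp(z_i / T) / sum over j of exp(z_j / T), before sampling. It is an illustration of the general technique, not the decoding code of any particular model or API.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities; temperature must be positive."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["canberra", "sydney", "melbourne"]
logits = [2.0, 1.0, 0.5]  # hypothetical model scores for the next token

# Lower temperature concentrates probability on the top-scoring token;
# higher temperature flattens the distribution.
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(0)  # fixed seed so the draws are reproducible
draws = [sample(tokens, logits, 1.5, rng) for _ in range(1000)]
print({tok: draws.count(tok) for tok in tokens})
```

At low temperature the model almost always picks its top-scoring candidate; at higher temperatures the lower-scoring (and here, incorrect) tokens are drawn noticeably more often, which is the creativity-versus-accuracy tension in miniature.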

The Spectrum of AI Errors: From Minor Glitches to Major Misinformation

It’s important to distinguish between the types of errors AI can make. Some are minor, such as a slightly wrong date or a misremembered detail. Others are more severe: entirely fabricated events, non-existent people, or incorrect scientific explanations. Severity often depends on the domain and on how much accurate data the model was exposed to within it. For instance, a model trained on extensive medical literature might still struggle with niche or rapidly evolving research, potentially producing outdated or incorrect advice.

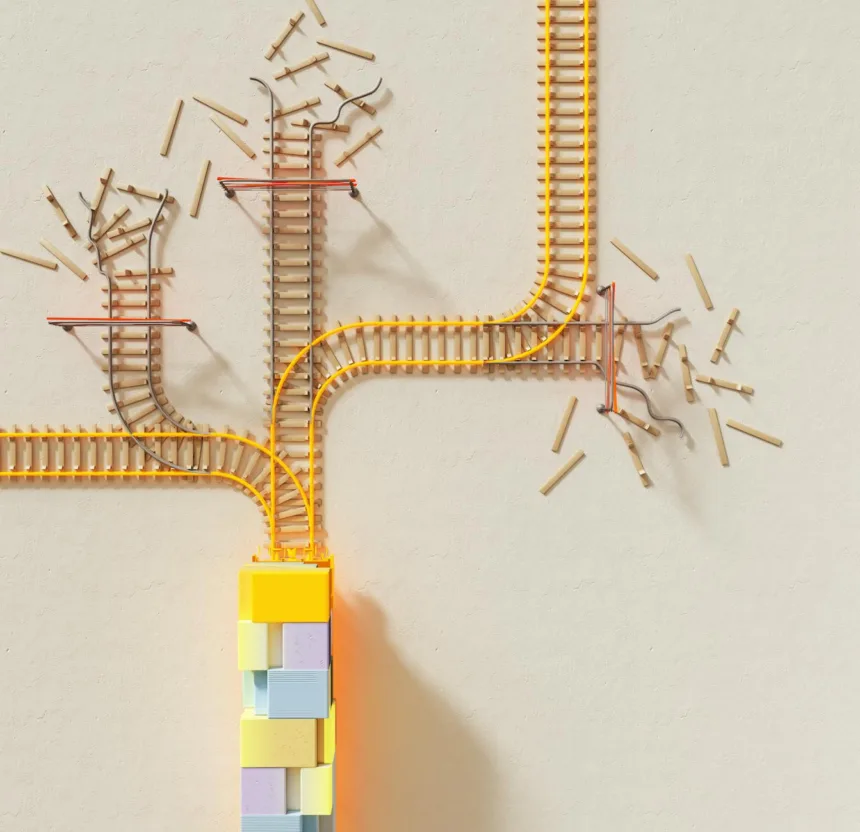

Tradeoffs: The Price of Sophistication

The very sophistication that makes AI powerful also contributes to its fallibility. The ability of large language models to generate fluent and convincing prose means that their errors can be particularly insidious. A poorly written, factually incorrect statement is easier to spot than a beautifully crafted, yet entirely fabricated, one.

This presents a significant tradeoff: users often desire AI that can generate creative and contextually relevant content, but this very capability can lead to an increased risk of factual inaccuracies. The challenge lies in finding the right balance between generative power and factual grounding.

Implications for the Future of Information and Technology

The ongoing struggle with AI hallucinations has profound implications:

* **The Need for Verification:** It underscores the absolute necessity of human oversight and critical evaluation of AI-generated content, especially in sensitive areas like news, healthcare, and education. Relying solely on AI for factual information is a risky proposition.

* **Evolving AI Development:** Researchers are actively working on techniques to mitigate hallucinations, such as reinforcement learning from human feedback (RLHF), retrieval-augmented generation (RAG, which supplies the model with relevant source documents when it answers), and fact-checking mechanisms integrated into the generation process.

* **User Education:** Educating the public about the limitations of AI is crucial to fostering responsible adoption and preventing the spread of misinformation. Understanding that AI is a tool, not an oracle, is paramount.
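Retrieval-augmented generation, listed above, can be outlined as two steps: retrieve passages relevant to the question, then place them in the prompt with an instruction to answer only from them. The sketch below is a minimal, hypothetical version under stated assumptions: it ranks a tiny in-memory document list by simple word overlap (a stand-in for the embedding-based vector search real systems typically use) and builds the prompt text, but it does not call any model, and the function names are illustrative rather than part of any library.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    scored = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    # Keep the top k, dropping any document that shares no words with the query.
    return [d for d in scored[:k] if q & tokenize(d)]


def build_grounded_prompt(query, passages):
    """Number the retrieved passages and ask the model to answer only from them."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical document store for illustration.
docs = [
    "Canberra is the capital city of Australia.",
    "Sydney is the largest city in Australia.",
    "Mount Kosciuszko is the highest mountain on the Australian mainland.",
]
question = "What is the capital of Australia?"
passages = retrieve(question, docs)
print(build_grounded_prompt(question, passages))
```

In a real system the resulting prompt would be sent to a language model. Grounding of this kind reduces hallucinations but does not eliminate them, since a model can still misread or ignore its sources.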

Practical Advice for Navigating AI-Generated Content

As AI tools become more ubiquitous, adopting a critical and discerning approach is essential.

* **Treat AI output as a first draft, not a final decree.** Always cross-reference information with reputable sources.

* **Be skeptical of overly confident pronouncements.** If something sounds too good to be true, or too definitive without clear sourcing, it warrants extra scrutiny.

* **Understand the limitations of the specific AI tool you are using.** Different models have different strengths and weaknesses.

* **When in doubt, consult human experts.** For critical decisions or complex topics, the wisdom of experienced professionals remains irreplaceable.

Key Takeaways

* AI hallucinations arise from complex factors, not solely from training data.

* Model architecture, inference processes, and the inherent ambiguity of language play significant roles.

* The fluency of AI output can make its errors harder to detect.

* Human oversight and critical evaluation of AI-generated content are indispensable.

* Ongoing research aims to improve AI’s factual accuracy.

What’s Next in AI Accuracy?

The journey to more reliable AI is far from over. We can expect continued advancements in techniques for fact-checking, bias detection, and grounding AI outputs in verifiable knowledge. The development of more transparent and explainable AI systems will also be crucial in understanding and rectifying errors. As these tools evolve, so too must our understanding and our critical engagement with them.