Taming Complexity: How Regularization Prevents Overfitting and Boosts Model Performance

In the intricate world of machine learning, building models that accurately predict unseen data is the ultimate goal. However, a common pitfall stands in the way: overfitting. This is where a model learns the training data too well, capturing not just the underlying patterns but also the noise and random fluctuations specific to that dataset. Consequently, such a model performs poorly when presented with new, unfamiliar data. This is precisely where regularization emerges as a critical technique, acting as a disciplined guide to prevent our models from becoming overly complex and brittle.

Anyone involved in building or deploying machine learning models, from data scientists and machine learning engineers to business analysts interpreting model outputs, should care about regularization. Its principles are fundamental to building reliable, generalizable AI systems. Without it, even the most sophisticated algorithms can overfit, leading to unreliable predictions and flawed decision-making.

This article covers the essentials of regularization: its underlying mechanics, the main strategies in use, and the practical considerations for applying it effectively. Along the way, we examine its benefits and inherent trade-offs and offer actionable advice for using it judiciously.

The Problem of Overfitting: A Model Too Familiar with Its Training Data

Imagine a student cramming for an exam by memorizing every single question and answer from past papers. While they might ace a test with identical questions, they would likely struggle with a new exam that tests the same concepts but with different phrasing or new scenarios. This is analogous to an overfit machine learning model.

Overfitting occurs when a model’s complexity exceeds the complexity of the underlying data generation process. High-capacity models, such as deep neural networks with many layers and parameters, are particularly susceptible. They have the flexibility to fit even the most intricate patterns in the training data, including noise that is unique to that specific sample. This leads to:

- High training accuracy: The model performs exceptionally well on the data it was trained on.

- Low validation/test accuracy: The model fails to generalize to new, unseen data, exhibiting poor predictive performance in real-world scenarios.

- Sensitivity to noise: The model learns and amplifies spurious correlations present in the training set.

The goal of machine learning is not to memorize the training data but to learn the underlying relationships that allow for accurate predictions on future observations. Regularization techniques are designed to penalize model complexity, thereby discouraging overfitting and promoting better generalization.
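
The gap between training error and held-out error can be reproduced on a toy problem. The sketch below is illustrative only: the sine target, the noise level, the sample sizes, and the polynomial degrees are all assumptions chosen for the demonstration, not values from a real dataset. It fits polynomials of increasing degree with NumPy and records the error on the training sample and on fresh data drawn from the same process.

```python
import numpy as np

rng = np.random.default_rng(0)


def sample(n):
    """Draw n noisy observations of an assumed target, sin(pi * x)."""
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=n)
    return x, y


def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on the points (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


x_train, y_train = sample(15)   # small training sample
x_test, y_test = sample(500)    # fresh data from the same process

results = {}
for degree in (1, 3, 9):
    # Least-squares polynomial fit; a higher degree means a more flexible model.
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    train_err, test_err = results[degree]
    print(f"degree={degree}  train MSE={train_err:.4f}  test MSE={test_err:.4f}")
```

Training error can only go down as the degree grows, because each higher-degree polynomial can represent every lower-degree one. Test error carries no such guarantee, and on a small noisy sample like this one it typically worsens once the model becomes flexible enough to chase the noise.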

What is Regularization? Penalizing Complexity to Enhance Generalization

At its core, regularization is a set of techniques used to prevent overfitting in machine learning models. It is most often discussed in the context of regression and neural networks, but it applies to most model families. In its most common form, it works by adding a penalty term to the model’s loss function. This penalty discourages the model from assigning excessively large weights to its features, effectively simplifying the model and reducing its reliance on specific training data points.

The standard loss function, which the model aims to minimize, typically measures the difference between predicted and actual values (e.g., Mean Squared Error for regression). A regularized loss function is modified as follows:

Regularized Loss = Original Loss + Regularization Term

The regularization term is a function of the model’s coefficients (weights). By adding this term, the optimization process must now balance minimizing the prediction error with minimizing the magnitude of the model’s weights. This forces the model to be more parsimonious, using simpler explanations for the data rather than complex ones that might just be fitting noise.
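
As a minimal sketch of this balance, the snippet below evaluates a regularized loss for a tiny linear model in plain Python. The function names, the toy data, and the choice of a sum-of-squares penalty are assumptions made for illustration; the penalty is passed in as a function, so any other penalty on the weights could be substituted.

```python
def predict(X, w):
    """Linear model: one dot product per row of X."""
    return [sum(x_j * w_j for x_j, w_j in zip(row, w)) for row in X]


def mean_squared_error(y_true, y_pred):
    """Original loss: average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def sum_of_squares(w):
    """One possible penalty on the weights (assumed here for illustration)."""
    return sum(w_j ** 2 for w_j in w)


def regularized_loss(X, y, w, lam, penalty):
    """Regularized Loss = Original Loss + Regularization Term."""
    original_loss = mean_squared_error(y, predict(X, w))
    return original_loss + lam * penalty(w)


# Toy data: the weights [1.0, 2.0] fit these two points exactly.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1.0, 2.0]
exact_fit = [1.0, 2.0]
smaller_weights = [0.5, 1.0]

for lam in (0.1, 1.0):
    loss_exact = regularized_loss(X, y, exact_fit, lam, sum_of_squares)
    loss_small = regularized_loss(X, y, smaller_weights, lam, sum_of_squares)
    print(f"lam={lam}: exact fit={loss_exact:.3f}, smaller weights={loss_small:.3f}")
```

With the weak penalty, the exact fit still has the lower regularized loss. With the stronger penalty, the smaller weights win even though they predict the training points less accurately, which is the trade the optimizer is being asked to make.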

Types of Regularization: L1, L2, and Beyond

Several types of regularization exist, each with its unique way of penalizing model complexity. The most prominent are L1 and L2 regularization, often referred to as Lasso and Ridge regression, respectively, in the context of linear models.

L2 Regularization (Ridge Regression): Shrinking Weights

L2 regularization adds a penalty proportional to the sum of the squared coefficients (weights) to the loss function. The formula for the L2 penalty is:

L2 Penalty = λ * Σ(wᵢ²)

Where:

- λ (lambda) is the regularization parameter, a hyperparameter that controls the strength of the penalty. A higher λ means a stronger penalty.

- wᵢ represents the individual weights (coefficients) of the model.

Analysis: L2 regularization tends to shrink all coefficients towards zero but rarely makes them exactly zero. This means that all features are retained in the model, but their influence is reduced. This leads to a smoother, less sensitive model. It’s particularly effective when many features have small to moderate effects.
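
The shrinkage is easy to observe on a linear model. The sketch below assumes a sum-of-squared-errors loss with no intercept, for which the L2-penalized weights have the closed form w = (XᵀX + λI)⁻¹Xᵀy. The `ridge_fit` helper, the synthetic data, and the λ values are assumptions made for illustration.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Solve (X^T X + lam * I) w = X^T y for the L2-penalized weights."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


# Synthetic regression problem with known (assumed) true weights.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
true_w = np.array([3.0, -2.0, 1.0])
y = X @ true_w + rng.normal(scale=0.1, size=100)

norms = []
for lam in (0.0, 10.0, 100.0, 1000.0):
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    print(f"lam={lam:>6}: w={np.round(w, 3)}  ||w||={norms[-1]:.3f}")
```

As λ grows, the norm of the weight vector falls steadily, yet every coefficient stays non-zero: the features are down-weighted rather than removed.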

L1 Regularization (Lasso Regression): Feature Selection Through Sparsity

L1 regularization adds a penalty proportional to the sum of the absolute values of the model’s coefficients to the loss function. The formula for the L1 penalty is:

L1 Penalty = λ * Σ(|wᵢ|)

Where:

- λ (lambda) is the regularization parameter.

- wᵢ represents the individual weights (coefficients) of the model.

Analysis: The key difference of L1 regularization lies in its ability to drive some coefficients exactly to zero. This effectively performs feature selection, removing irrelevant or redundant features from the model. This results in a sparser model, which can be more interpretable and computationally efficient. L1 regularization is advantageous when you suspect that many of your features are irrelevant.
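
To see why L1 produces exact zeros while L2 does not, it helps to look at the simplest possible case: a single weight w whose unpenalized optimum is z. The sketch below assumes the loss 0.5 * (w - z)² and is not tied to any particular library; the helper names are hypothetical. Under that assumption, the L1-penalized minimizer is the soft-thresholding rule, which sets w to zero whenever |z| ≤ λ, while the L2-penalized minimizer is z / (1 + 2λ), which only rescales.

```python
def l1_minimizer(z, lam):
    """Minimizer of 0.5 * (w - z)**2 + lam * abs(w): soft-thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small effects are removed entirely


def l2_minimizer(z, lam):
    """Minimizer of 0.5 * (w - z)**2 + lam * w**2: proportional shrinkage."""
    return z / (1.0 + 2.0 * lam)


unpenalized = [3.0, -0.4, 0.8, -2.0]
lam = 1.0

l1_weights = [l1_minimizer(z, lam) for z in unpenalized]
l2_weights = [l2_minimizer(z, lam) for z in unpenalized]

print("L1:", l1_weights)  # [2.0, 0.0, 0.0, -1.0]
print("L2:", [round(w, 3) for w in l2_weights])
```

The two weights with |z| below λ are zeroed by the L1 rule, while the L2 rule keeps all four and merely scales them down by the same factor.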

Contested Point: While L1 excels at feature selection, its behavior can be less stable than L2 when dealing with highly correlated features. In such cases, it might arbitrarily select one feature and zero out others, even if they carry similar predictive information. L2 tends to distribute the shrinkage more evenly among correlated features.

Elastic Net: Combining L1 and L2

Elastic Net regularization is a hybrid approach that combines both L1 and L2 penalties. It offers a compromise, providing the feature selection capabilities of L1 while retaining the shrinkage stability of L2. The penalty term is a weighted sum of L1 and L2 penalties.

Analysis: Elastic Net is often a good default choice when the number of features is large or when dealing with multicollinearity (highly correlated features), as it can select groups of correlated features together.
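
One way to write the weighted sum, in the same notation as the penalties above, is Penalty = λ * (α * Σ(|wᵢ|) + (1 - α) * Σ(wᵢ²)), where α between 0 and 1 sets the mix. This parameterization is an assumption for illustration; libraries differ in how they scale the two terms, so check the documentation of the one you use. A minimal sketch, with a hypothetical `l1_ratio` argument playing the role of α:

```python
def elastic_net_penalty(w, lam, l1_ratio):
    """Weighted sum of the L1 and L2 penalties (illustrative parameterization).

    l1_ratio = 1.0 gives the pure L1 penalty, l1_ratio = 0.0 the pure L2 penalty.
    """
    if not 0.0 <= l1_ratio <= 1.0:
        raise ValueError("l1_ratio must lie in [0, 1]")
    l1_term = sum(abs(w_j) for w_j in w)
    l2_term = sum(w_j ** 2 for w_j in w)
    return lam * (l1_ratio * l1_term + (1.0 - l1_ratio) * l2_term)


w = [1.0, -2.0, 0.0]
print(elastic_net_penalty(w, lam=0.5, l1_ratio=1.0))  # 1.5 (pure L1)
print(elastic_net_penalty(w, lam=0.5, l1_ratio=0.0))  # 2.5 (pure L2)
print(elastic_net_penalty(w, lam=0.5, l1_ratio=0.5))  # 2.0 (equal mix)
```

This leaves two hyperparameters to tune instead of one: the overall strength λ and the mixing ratio.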

Other Regularization Techniques in Deep Learning

Beyond L1 and L2, deep learning employs several other powerful regularization methods:

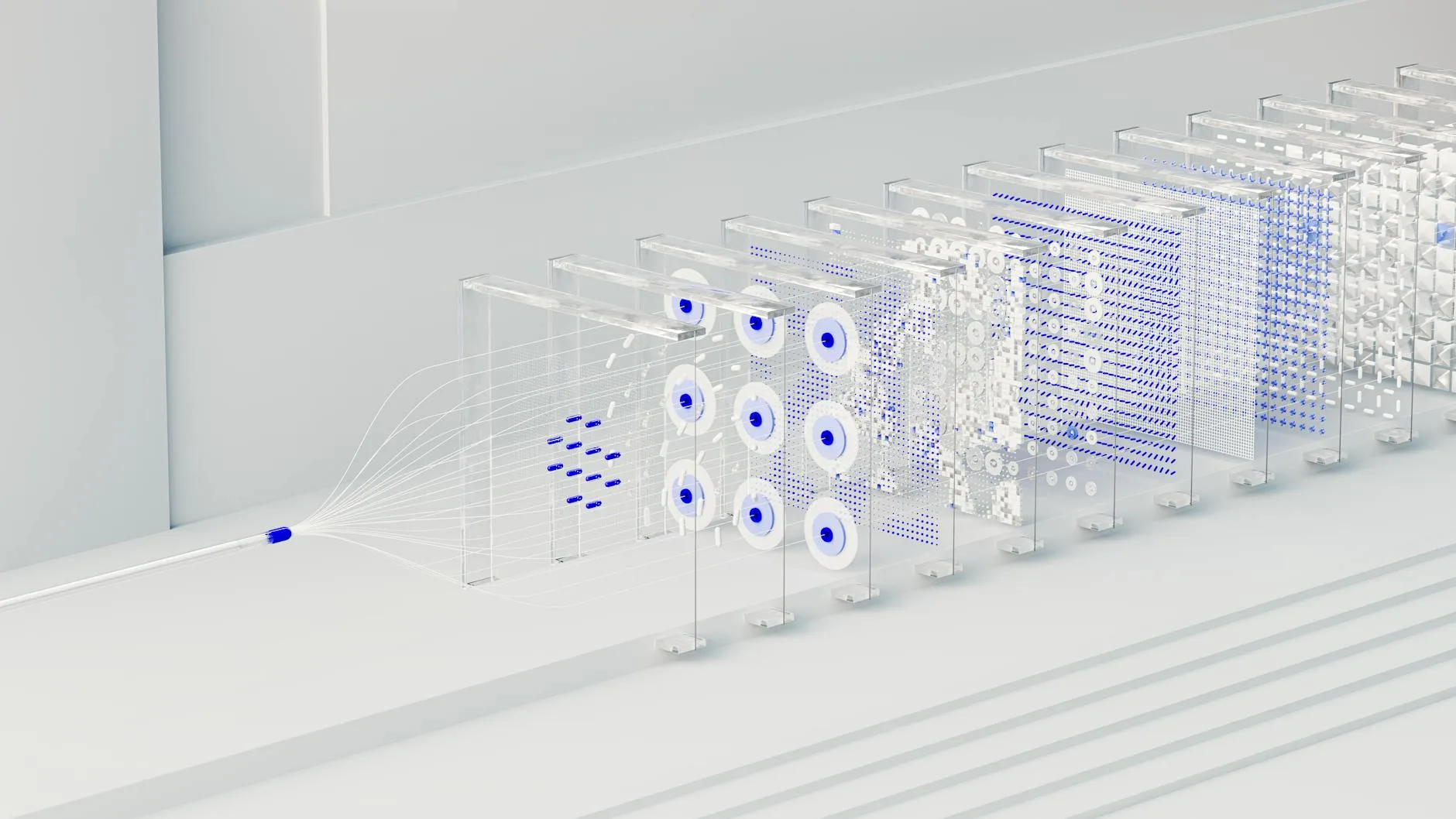

- Dropout: During training, randomly deactivates a fraction of neurons (and their connections) in the layers where it is applied, drawing a fresh random subset on each training step. The dropped neurons have their activations set to zero for that step, which forces the network to learn redundant representations and prevents co-adaptation of neurons. At inference time, all neurons are active.

- Early Stopping: Monitors the model’s performance on a validation set during training. Training is halted when the performance on the validation set begins to degrade, even if the training loss is still decreasing. This prevents the model from continuing to train into the overfitting regime.

- Data Augmentation: Artificially increases the size and diversity of the training dataset by applying various transformations (e.g., rotations, flips, crops, color jittering for images). This exposes the model to a wider range of variations, making it more robust and less prone to memorizing specific training examples.

- Batch Normalization: Normalizes the activations of a layer using statistics computed over the mini-batch. This can stabilize training and allow for higher learning rates. Because the batch statistics vary from one mini-batch to the next, it also injects a small amount of noise, which acts as a mild regularizer.
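
Two of these techniques, dropout and early stopping, are simple enough to sketch in a few lines of NumPy. Both functions below are illustrative and independent of any deep learning framework. The dropout sketch assumes the common "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The early-stopping sketch assumes a hypothetical `patience` setting, the number of consecutive epochs without improvement that are tolerated before halting.

```python
import numpy as np


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a random fraction `rate` of activations in training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must lie in [0, 1)")
    if not training or rate == 0.0:
        return activations  # inference: every neuron stays active
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob  # True where a neuron is kept
    # Rescale the kept activations so the expected value is unchanged.
    return activations * mask / keep_prob


def early_stopping(val_losses, patience):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses."""
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch  # halt; restore the weights from best_epoch
    return len(val_losses) - 1, best_epoch


rng = np.random.default_rng(0)
hidden = np.ones(1000)
dropped = dropout(hidden, rate=0.5, rng=rng)
print("fraction zeroed:", float(np.mean(dropped == 0.0)))

# Validation loss improves, then starts to degrade.
stop_epoch, best_epoch = early_stopping([1.0, 0.8, 0.7, 0.72, 0.75, 0.9], patience=2)
print("stop at epoch", stop_epoch, "and keep the model from epoch", best_epoch)
```

In the early-stopping example, the loss bottoms out at epoch 2, fails to improve at epochs 3 and 4, and training halts at epoch 4 with the epoch-2 model retained.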

Why Regularization Matters: Beyond Preventing Simple Errors

The importance of regularization extends far beyond simply improving a model’s score on a test dataset. It is crucial for building trustworthy and deployable AI systems:

- Improved Generalization: This is the primary benefit. Regularized models are more likely to perform well on unseen data, making them reliable for real-world applications.

- Reduced Variance: Overfit models have high variance, meaning their performance can change drastically with small changes in the training data. Regularization reduces this variance, leading to more stable and predictable models.

- Feature Selection and Interpretability: L1 regularization, in particular, can help identify and remove irrelevant features, leading to simpler, more interpretable models. This is invaluable when understanding *why* a model makes certain predictions is as important as the prediction itself.

- Handling High-Dimensional Data: In datasets with many features (high dimensionality), overfitting is a significant risk. Regularization helps manage this complexity effectively.

- Robustness to Noise: By penalizing large weights, regularization prevents the model from latching onto noisy patterns in the training data, making it more robust.

Trade-offs and Limitations of Regularization

While powerful, regularization is not a magic bullet and comes with its own set of considerations:

- Underfitting: If the regularization strength (λ) is too high, the model might become too simplistic, failing to capture even the underlying patterns in the data. This leads to underfitting, where the model performs poorly on both training and test data.

- Hyperparameter Tuning: The regularization strength (λ) is a hyperparameter that needs to be carefully tuned. This often requires cross-validation, adding computational overhead. The optimal λ can vary significantly depending on the dataset and the model.

- Loss of Information: Aggressive regularization (especially L1) can lead to the exclusion of potentially useful features, which might be important in certain contexts or for specific subsets of data.

- Bias-Variance Trade-off: Regularization primarily aims to reduce variance at the cost of potentially introducing a small amount of bias. The goal is to find an optimal balance.

- Computational Cost: While L1 can lead to sparser models that are faster to infer with, the training process for regularized models can be slightly more computationally intensive than their non-regularized counterparts, especially during hyperparameter search.

Practical Advice and Cautions for Applying Regularization

Successfully implementing regularization requires a thoughtful approach:

- Start with a Baseline: Train a model without any regularization to establish a baseline performance.

- Cross-Validation is Key: Use techniques like k-fold cross-validation to tune the regularization parameter (λ), choosing the value with the best average validation performance. Then confirm the selected model on a held-out test set that played no part in tuning.

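- Feature Scaling: Regularization methods that penalize coefficient magnitudes (L1, L2) are sensitive to the scale of features, because a feature with a small numeric range needs a large coefficient to have the same effect and is therefore penalized more heavily. Scale your features (e.g., using standardization or min-max scaling) before applying regularization, and compute the scaling statistics from the training data only.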

- Experiment with Different Techniques: Don’t be afraid to try different types of regularization (L1, L2, Elastic Net) and their combinations to see what works best for your specific problem.

- Monitor for Underfitting: If your regularized model performs poorly on both training and validation sets, you might have over-regularized. Consider reducing the regularization strength or trying a more complex model.

- Understand Your Data: If interpretability is crucial, lean towards L1 regularization. If predictive accuracy with many correlated features is the priority, L2 or Elastic Net might be better.

- Deep Learning Specifics: For neural networks, experiment with dropout rates, batch normalization, and early stopping. Data augmentation is often beneficial for image and text data, provided the transformations preserve each example’s label.
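
Several of these points (baseline, cross-validation, feature scaling) can be combined in one short workflow. The following is a rough sketch rather than a recommended implementation: it assumes L2-penalized linear regression solved in closed form, synthetic data with deliberately mismatched feature scales, and a hand-picked grid of λ values. All helper names are hypothetical. Scaling statistics are computed on each training fold only, and λ = 0 serves as the unregularized baseline.

```python
import numpy as np


def standardize(train, other):
    """Scale both arrays using the mean and std of the training array only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0.0] = 1.0  # guard against constant features
    return (train - mean) / std, (other - mean) / std


def ridge_fit(X, y, lam):
    """Closed-form L2-penalized least squares (sum-of-squares loss assumed)."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


def cross_validated_mse(X, y, lam, k=5, seed=0):
    """Average validation MSE of ridge regression over k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        X_train, X_val = standardize(X[train_idx], X[val_idx])
        y_mean = y[train_idx].mean()  # centering y stands in for an unpenalized intercept
        w = ridge_fit(X_train, y[train_idx] - y_mean, lam)
        predictions = X_val @ w + y_mean
        scores.append(np.mean((y[val_idx] - predictions) ** 2))
    return float(np.mean(scores))


# Synthetic data: 20 features on very different scales, only 3 of them informative.
rng = np.random.default_rng(42)
X = rng.normal(size=(60, 20)) * rng.uniform(0.1, 10.0, size=20)
true_w = np.zeros(20)
true_w[:3] = [2.0, -1.0, 0.5]
y = X @ true_w + rng.normal(scale=1.0, size=60)

candidate_lams = [0.0, 0.1, 1.0, 10.0, 100.0, 1000.0]  # 0.0 is the baseline
cv_scores = {lam: cross_validated_mse(X, y, lam) for lam in candidate_lams}
best_lam = min(cv_scores, key=cv_scores.get)

for lam, score in cv_scores.items():
    print(f"lam={lam:>7}: CV MSE={score:.3f}")
print("selected lam:", best_lam)
```

If the largest λ values score clearly worse than the baseline, that is the over-regularization (underfitting) signal described above. The selected model should still be checked once on data that was not used anywhere in this loop.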

Key Takeaways

- Regularization is a vital technique to prevent overfitting in machine learning models, ensuring better generalization to unseen data.

- Overfitting occurs when a model learns the training data too well, including noise, leading to poor performance on new data.

- L1 regularization (Lasso) promotes sparsity and performs feature selection by driving some coefficients to zero.

- L2 regularization (Ridge) shrinks coefficients towards zero without making them exactly zero, leading to smoother models.

- Elastic Net combines L1 and L2 penalties, offering benefits of both.

- Deep learning employs techniques like dropout, early stopping, and data augmentation for regularization.

- The key trade-off is between reducing model variance (overfitting) and potentially introducing bias (underfitting).

- Proper feature scaling and systematic hyperparameter tuning (e.g., via cross-validation) are crucial for effective regularization.
