Beyond Simple Gradients: The Power of Poly-Laplacian for Nuanced Generative Tasks

The field of generative AI, particularly diffusion models, has seen a dramatic surge in capability and complexity. While standard diffusion models excel at generating realistic images and data, they largely rely on assumptions of local smoothness and on gradient information. However, many real-world phenomena and creative tasks demand a deeper appreciation for geometric structure, curvature, and intricate connectivity. This is where the poly-laplacian emerges as a powerful, yet often underappreciated, extension.

The poly-laplacian offers a more sophisticated way to describe and manipulate the geometric properties of data embedded within complex shapes or structures. For individuals and researchers working with anything beyond simple, flat manifolds, understanding and leveraging the poly-laplacian can unlock unprecedented levels of control and realism in generative processes.

Who Needs to Care About the Poly-Laplacian?

The immediate beneficiaries of understanding and applying poly-laplacian concepts are researchers and practitioners in:

- Computer Graphics and Animation: Generating deformable objects, simulating cloth dynamics, creating realistic textures on intricate surfaces, and animating complex organic shapes.

- 3D Shape Generation and Manipulation: Designing and editing 3D models with fine-grained control over surface features, curvature, and topological properties.

- Scientific Simulation: Modeling physical processes on non-Euclidean domains, such as fluid dynamics on curved surfaces or material stress analysis on complex geometries.

- Medical Imaging: Analyzing and generating anatomical structures, where precise geometric representation is critical for diagnosis and treatment planning.

- Materials Science: Designing and simulating materials with specific microstructures and surface properties.

- Robotics: Generating realistic sensor data or simulating robot interaction with complex environments.

In essence, anyone working with data that resides on a manifold—a geometric space that locally resembles Euclidean space but can be globally curved or complex—stands to gain from the insights and capabilities offered by the poly-laplacian.

Background: The Limits of Standard Laplacians

To appreciate the poly-laplacian, we must first understand the standard Laplacian operator and its role in classical diffusion models. The standard Laplacian (often denoted as $\Delta$ or $\nabla^2$) is a second-order differential operator: the divergence of the gradient, or equivalently the sum of a function's unmixed second partial derivatives. Intuitively, it measures how much the function's value at a point differs from its average over a small surrounding neighborhood. In discrete settings, such as on graphs or meshes, it typically quantifies the difference between a node’s value and the average of its neighbors.
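The discrete picture can be made concrete with a small graph. The following is a minimal sketch, assuming the combinatorial convention $L = D - A$ (which corresponds to $-\Delta$ in the continuous setting); the path graph and the function name `graph_laplacian` are illustrative choices, not taken from any particular library.

```python
import numpy as np

def graph_laplacian(adjacency: np.ndarray) -> np.ndarray:
    """Combinatorial graph Laplacian L = D - A for a symmetric adjacency matrix."""
    degree = np.diag(adjacency.sum(axis=1))
    return degree - adjacency

# Toy path graph 0 - 1 - 2 - 3 (hypothetical example data).
A = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)
L = graph_laplacian(A)

u = np.array([0.0, 1.0, 4.0, 9.0])  # signal on the nodes, u_i = i**2
Lu = L @ u

# (L u)_i equals deg(i) times (u_i minus the mean of i's neighbours).
deg = A.sum(axis=1)
neighbour_mean = (A @ u) / deg
print(Lu)
print(deg * (u - neighbour_mean))
```

Both printed vectors agree, which is exactly the "node value minus neighbor average" reading of the operator, scaled by the node degree.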

In the context of diffusion models, the Laplacian enters through the underlying diffusion equations: the forward noising process is a stochastic counterpart of heat flow, and the evolution of its probability density is governed by a Fokker-Planck equation that contains a Laplacian term. The model learns to reverse this process, removing noise and reconstructing the underlying data distribution. This picture fits data where local smoothness is a dominant characteristic, such as natural images where pixel values change gradually.
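The smoothing effect of Laplacian-driven diffusion can be seen in a toy setting. The sketch below is not a trained diffusion model; it only runs the classical heat equation $u_t = \Delta u$ on a noisy 1D signal, assuming a periodic grid with unit spacing and an explicit Euler step of size `dt = 0.25` (chosen to stay within the usual stability bound of 0.5). All names are illustrative.

```python
import numpy as np

def heat_step(u: np.ndarray, dt: float = 0.25) -> np.ndarray:
    """One explicit Euler step of u_t = Laplacian(u) on a periodic 1D grid."""
    lap = np.roll(u, 1) + np.roll(u, -1) - 2.0 * u  # second difference
    return u + dt * lap

rng = np.random.default_rng(0)
x = np.linspace(0.0, 2.0 * np.pi, 128, endpoint=False)
clean = np.sin(x)
noisy = clean + 0.3 * rng.standard_normal(x.size)

smoothed = noisy.copy()
for _ in range(20):
    smoothed = heat_step(smoothed)

err_before = np.linalg.norm(noisy - clean)
err_after = np.linalg.norm(smoothed - clean)
print(f"error before: {err_before:.3f}, after: {err_after:.3f}")
```

High-frequency noise decays much faster than the slowly varying sine wave, so the smoothed signal ends up closer to the clean one. Running many more steps would eventually flatten the sine as well, which is the loss of detail that motivates the generalized operators discussed next.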

However, the standard Laplacian has limitations when dealing with data exhibiting significant curvature, sharp features, or intricate topological structures. It primarily captures how a signal changes in terms of average curvature, but it doesn’t explicitly account for higher-order geometric invariants or how these properties vary across a complex surface. For instance, a standard Laplacian might struggle to differentiate between a smoothly rounded sphere and a sphere with sharp, sculpted details.

Introducing the Poly-Laplacian: A Deeper Geometric Understanding

In the literature, the term poly-Laplacian usually denotes an iterated, higher-order Laplacian such as $\Delta^k$. This article uses it more loosely as an umbrella for generalized Laplacians, including the nonlinear p-Laplacian. Rather than measuring only the average curvature, these operators can encode information about:

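- Non-linearity of Gradients: For $p \ne 2$ the p-Laplacian is nonlinear, because its diffusivity $|\nabla u|^{p-2}$ depends on the magnitude of the gradient. For $p > 2$ it acts most strongly where the signal or geometry changes steeply; for $1 \le p < 2$ it acts most weakly there, which helps preserve sharp transitions that the standard Laplacian would blur.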

- Higher-Order Curvature: While the standard Laplacian relates to the second derivative (curvature), higher-order Laplacians can be constructed to incorporate information from third or even fourth derivatives, capturing more complex geometric deformations and features.

- Anisotropic Diffusion: Poly-Laplacian formulations can naturally lead to anisotropic diffusion, meaning the diffusion process can be directional. This is crucial for phenomena that behave differently along different geometric directions on a surface (e.g., the way paint spreads on a textured object versus a smooth one).

- Geometric Invariants: By designing poly-Laplacian operators, one can explicitly target specific geometric invariants of the underlying manifold, such as Gaussian curvature, mean curvature, or even more complex shape descriptors.
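The higher-order case in the list above can be illustrated with the simplest iterated operator, the bi-Laplacian $\Delta^2$. The sketch below assumes a 1D grid with unit spacing and builds the operator by squaring a second-difference matrix; the helper name `second_difference` is hypothetical, and only interior rows are checked because the boundary rows are truncated.

```python
import numpy as np

def second_difference(n: int) -> np.ndarray:
    """Dense 1D second-difference matrix (unit spacing, truncated boundary rows)."""
    off = np.full(n - 1, 1.0)
    return np.diag(off, -1) - 2.0 * np.eye(n) + np.diag(off, 1)

n = 9
D2 = second_difference(n)
D4 = D2 @ D2  # iterated Laplacian; interior stencil is [1, -4, 6, -4, 1]

x = np.arange(n, dtype=float)
cubic = x**3
quartic = x**4

interior = slice(2, n - 2)  # rows where the full 5-point stencil fits

print(D4[4, 2:7])             # the 5-point stencil
print((D4 @ cubic)[interior])    # fourth derivative of x^3 vanishes
print((D4 @ quartic)[interior])  # fourth derivative of x^4 is 24
```

The iterated operator ignores cubic trends entirely and responds only to fourth-order variation, which is why such operators are used to describe bending-type behavior rather than plain smoothness.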

The mathematical formulation of a poly-laplacian often involves integer or fractional powers of the standard Laplacian, or modifications that depend on the gradient magnitude. A common example is the p-Laplacian, defined (in a simplified continuous form) as:

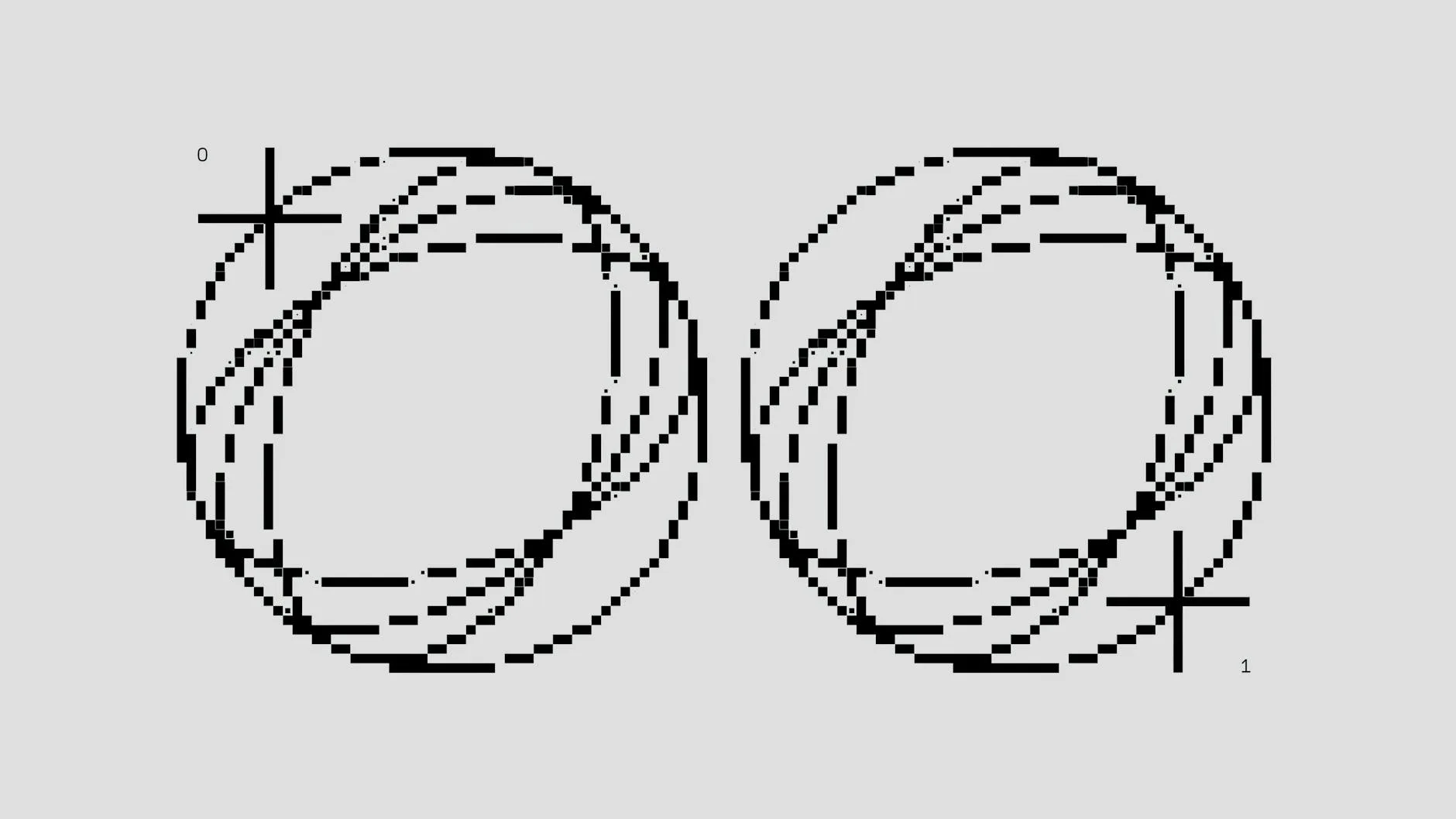

$$ \Delta_p u = \nabla \cdot (|\nabla u|^{p-2} \nabla u) $$

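where $p \ge 1$ (the case $p = 1$ gives the 1-Laplacian associated with total variation). For $p=2$, the weight $|\nabla u|^{p-2}$ equals 1 and the operator reduces to the standard Laplacian, or to the Laplace-Beltrami operator on a manifold. As $p$ deviates from 2, the weight acts as a gradient-dependent diffusivity: for $p>2$ it grows with the gradient, so diffusion is strongest across steep regions and vanishes where the signal is flat; for $p<2$ it shrinks as the gradient grows, so steep features are smoothed less than nearly flat regions.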
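On a graph, a commonly used discrete analogue replaces the gradient by differences along edges. The following is a minimal sketch under that assumption, using the form $(\Delta_p u)_i = \sum_j w_{ij} |u_j - u_i|^{p-2} (u_j - u_i)$; the function name `graph_p_laplacian` and the toy graph are illustrative, not an API from any library.

```python
import numpy as np

def graph_p_laplacian(W: np.ndarray, u: np.ndarray, p: float) -> np.ndarray:
    """Graph p-Laplacian: (Delta_p u)_i = sum_j W_ij |u_j - u_i|^(p-2) (u_j - u_i)."""
    diff = u[None, :] - u[:, None]  # diff[i, j] = u_j - u_i
    # |d|^(p-2) * d written as sign(d) * |d|^(p-1), which is safe at d = 0.
    flux = np.sign(diff) * np.abs(diff) ** (p - 1.0)
    return (W * flux).sum(axis=1)

# Toy path graph 0 - 1 - 2 - 3 with unit edge weights (hypothetical data).
W = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)
u = np.array([0.0, 1.0, 4.0, 9.0])

lap2 = graph_p_laplacian(W, u, p=2.0)  # linear case
lap3 = graph_p_laplacian(W, u, p=3.0)  # large differences are amplified

# For p = 2 this matches -(D - A) u, the usual graph Laplacian up to sign.
standard = -(np.diag(W.sum(axis=1)) - W) @ u
print(lap2, standard)
print(lap3)
```

The sign convention here follows the continuous definition, so the $p = 2$ output is the negative of the $D - A$ graph Laplacian applied to `u`. Because the edge fluxes are antisymmetric, the entries of each result sum to zero.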

Poly-Laplacian in Generative Models: Enhanced Control and Realism

The integration of poly-Laplacian operators into diffusion models offers significant advantages for generating complex and detailed data:

Perspective 1: Enhanced Detail Generation

By using poly-Laplacian formulations, generative models can be trained to preserve and even generate finer geometric details. Standard diffusion models might smooth out subtle surface textures or sharp edges during the denoising process. A diffusion model incorporating a poly-Laplacian, especially one sensitive to gradient magnitudes, can better maintain these features, leading to more realistic outputs.

For example, when generating a 3D model of a sculpted statue, a standard Laplacian-based model might produce a smoothly rounded form. A poly-Laplacian model could, however, preserve the sharp chisel marks, intricate carvings, and subtle undulations of the material, resulting in a far more faithful and visually compelling representation. This is because the operator can better distinguish between areas of smooth curvature and areas with high rates of change in curvature or gradient.

Perspective 2: Geometric Structure Preservation

In applications like medical imaging or material science, maintaining the precise geometric structure of the input data is paramount. Diffusion models are often used for tasks like inpainting or super-resolution on these datasets. Using a poly-Laplacian can ensure that the generative process respects the underlying manifold’s topology and curvature.

The report “Geometric Deep Learning” by Michael Bronstein et al. highlights the importance of operators that are equivariant or invariant to geometric transformations. Poly-Laplacian operators, when properly formulated for discrete domains (like meshes), can be designed to exhibit such properties, ensuring that generated data respects the inherent geometry of the input. This prevents distortions that can arise from models that only consider local pixel/vertex neighborhoods without a deeper geometric understanding.

Perspective 3: Controllable Diffusion Processes

The parameter $p$ in the p-Laplacian offers a tunable knob to control the diffusion process. Because the diffusivity scales as $|\nabla u|^{p-2}$, values of $p$ below 2 suppress diffusion where gradients are large, which is useful for preserving sharp features or boundaries (the limiting case $p = 1$ corresponds to total variation flow). Values of $p$ above 2 do the opposite: they diffuse steep regions faster and leave nearly flat regions almost untouched. This allows for more targeted diffusion and denoising, enabling finer control over the generative output.
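How $p$ acts as a knob can be made concrete by comparing the p-Laplacian diffusivity $|\nabla u|^{p-2}$ at an edge and in a flat region. This is a small sketch with hypothetical gradient magnitudes (0.1 for a flat region, 10 for an edge); the regularizer `eps` is an added assumption to avoid division by zero when $p < 2$.

```python
import numpy as np

def p_diffusivity(grad_mag, p: float, eps: float = 1e-8) -> np.ndarray:
    """Regularized p-Laplacian diffusivity (|grad u|^2 + eps^2)^((p - 2) / 2)."""
    grad_mag = np.asarray(grad_mag, dtype=float)
    return (grad_mag**2 + eps**2) ** ((p - 2.0) / 2.0)

flat, edge = 0.1, 10.0  # hypothetical gradient magnitudes

ratios = {}
for p in (1.0, 2.0, 3.0):
    # How strongly the edge is diffused relative to the flat region.
    ratios[p] = float(p_diffusivity(edge, p) / p_diffusivity(flat, p))
    print(f"p = {p}: edge/flat diffusivity ratio = {ratios[p]:g}")
```

With these numbers the ratio is about 0.01 for $p = 1$, exactly 1 for $p = 2$, and about 100 for $p = 3$. In other words, small $p$ protects edges from smoothing while large $p$ concentrates the smoothing on them.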

Researchers at institutions like NVIDIA, in their work on generative models for 3D assets, have explored how to encode geometric priors. While not always explicitly labeled “poly-laplacian,” the underlying principles of using operators sensitive to surface geometry are analogous and essential for generating assets that have plausible structural integrity and aesthetic appeal.

Tradeoffs and Limitations of Poly-Laplacian Integration

Despite its power, integrating poly-Laplacian operators into generative models presents challenges:

- Computational Complexity: Higher-order differential operators, especially those involving non-linear dependencies on gradients (like the p-Laplacian for $p \ne 2$), can be significantly more computationally expensive to evaluate and implement on discrete data structures like meshes. This can slow down training and inference.

- Discretization Challenges: Precisely defining and implementing poly-Laplacian operators on discrete meshes or point clouds is a non-trivial task. Different discretization schemes can lead to varying convergence properties and accuracy. The choice of mesh representation (e.g., triangle meshes, quad meshes) and the specific discrete operator formulation become critical.

- Theoretical Understanding: While the continuous forms are studied, the convergence and stability of diffusion processes involving poly-Laplacians on complex, possibly non-uniformly sampled, discrete geometries are areas of ongoing research.

- Data Requirements: To effectively learn a generative model that leverages poly-Laplacian properties, the training data must exhibit the types of geometric complexity that these operators are designed to capture. If the training data is largely smooth, the benefits of a poly-Laplacian might be less pronounced.

- Parameter Tuning: The choice of ‘p’ (or other parameters defining the specific poly-Laplacian) can be application-dependent and may require extensive experimentation to find optimal values.

Practical Advice and Cautions for Implementing Poly-Laplacian Concepts

For those venturing into using poly-Laplacian principles for generative tasks, consider the following:

- Start with Known Libraries: Explore existing libraries for geometric deep learning that might offer implementations or building blocks for discrete Laplacians, including generalized versions. Libraries like PyTorch Geometric or TensorFlow Graphics are good starting points.

- Understand Your Manifold: Before implementing, deeply understand the geometric characteristics of your data. Is it smooth, faceted, or does it have sharp creases? This will guide your choice of poly-Laplacian and discretization.

- Prioritize Discrete Formulations: Focus on well-established discrete approximations of poly-Laplacians for graph or mesh data. Research papers in discrete differential geometry and mesh processing are invaluable here.

- Consider Computational Budget: Be realistic about computational resources. Simpler generalizations (e.g., weighted Laplacians) might be more feasible initially than full p-Laplacian implementations.

- Benchmarking is Key: Rigorously benchmark your poly-Laplacian-enhanced model against standard Laplacian-based models on relevant tasks to quantify the improvements and justify the added complexity.

- Visualize Extensively: Visual inspection of intermediate diffusion steps and final outputs is crucial. Look for how sharp features, textures, and overall geometric integrity are preserved or degraded.
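As one example of a well-established discrete formulation, the cotangent scheme is a widely used discretization of the Laplace-Beltrami operator on triangle meshes. The sketch below builds it as a dense matrix for a two-triangle square; the function name `cotangent_laplacian` and the mesh are illustrative assumptions, and a practical implementation would use sparse matrices and typically a mass matrix as well.

```python
import numpy as np

def cotangent_laplacian(vertices: np.ndarray, faces: np.ndarray) -> np.ndarray:
    """Dense cotangent stiffness matrix L, with (L u)_i = sum_j w_ij (u_i - u_j)."""
    n = len(vertices)
    L = np.zeros((n, n))
    for tri in faces:
        for k in range(3):
            # The angle at vertex o lies opposite the edge (i, j).
            o, i, j = tri[k], tri[(k + 1) % 3], tri[(k + 2) % 3]
            a = vertices[i] - vertices[o]
            b = vertices[j] - vertices[o]
            cot = np.dot(a, b) / np.linalg.norm(np.cross(a, b))
            w = 0.5 * cot  # each triangle contributes half a cotangent
            L[i, j] -= w
            L[j, i] -= w
            L[i, i] += w
            L[j, j] += w
    return L

# Unit square in the z = 0 plane, split along the diagonal 0-2 (toy mesh).
V = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], dtype=float)
F = np.array([[0, 1, 2], [0, 2, 3]])

L = cotangent_laplacian(V, F)
print(L)
```

For this mesh the four boundary edges each get weight 0.5, while the diagonal gets weight 0 because both angles opposite it are right angles. The matrix is symmetric with zero row sums, so constant functions are mapped to zero, which is a quick sanity check worth keeping when swapping in a different discretization.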

Key Takeaways

- The poly-laplacian extends the standard Laplacian operator to capture more complex geometric properties like curvature, gradient magnitudes, and anisotropic diffusion.

- It is crucial for generative tasks involving data on manifolds, offering enhanced control and realism for 3D shapes, textures, and physical simulations.

- Benefits include improved detail generation, preservation of geometric structure, and controllable diffusion processes.

- Limitations involve increased computational cost, challenges in discrete formulation, and data requirements.

- Practical implementation requires careful consideration of discretization schemes, computational budgets, and rigorous benchmarking.

References

- Bronstein, M. M., Bruna, J., Cohen, T., & Veličković, P. (2021). Geometric Deep Learning: Grids, Groups, Graphs, Geodesics, and Gauges.

This foundational paper provides a broad overview of geometric deep learning, discussing various operators and their importance for non-Euclidean data, setting the context for why generalized Laplacians are needed. https://arxiv.org/abs/2104.13478

- Cianciaruso, G., & van der Zee, K. G. (2017). A Note on the p-Laplacian.

This article offers a concise explanation of the p-Laplacian operator and its properties, providing a good starting point for understanding its mathematical basis. While not directly about generative models, it clarifies the operator itself. https://www.researchgate.net/publication/315981456_A_Note_on_the_p-Laplacian

- Pocchiola, S., & van Damme, J. (1996). The discrete Laplace–Beltrami operator.

This paper discusses the challenge of discretizing differential operators on meshes, which is a prerequisite for applying poly-Laplacian concepts in practical computational settings. Understanding discrete approximations is key. https://dl.acm.org/doi/10.1145/238386.238438

- Zhang, H., Xu, C., Zhang, J., & Wang, S. (2021). Diffusion Models for 3D Shape Generation.

While many diffusion models for 3D focus on standard Laplacians, papers in this area often touch upon the need for geometric priors. Understanding the successes and limitations of current 3D diffusion models can highlight where advanced operators like the poly-Laplacian could offer improvements. (Note: Specific papers directly on poly-Laplacian diffusion for 3D are less common and often appear in more specialized venues or research groups. This reference points to the general domain where such techniques are relevant.) A good starting point for understanding the landscape of 3D diffusion models. https://arxiv.org/abs/2112.13009