Beyond Resilience: Understanding the Core Principles of Robustness for Systems That Don’t Just Recover, They Endure

In an increasingly interconnected and unpredictable world, the ability of systems to withstand disturbances and maintain their functionality is paramount. While terms like “resilience” and “anti-fragility” often dominate the discourse, the foundational concept of robustness offers a distinct and critical lens through which to design and evaluate our most vital infrastructures, software, and organizations. Robustness is not merely about bouncing back; it’s about not breaking in the first place, ensuring consistent performance despite expected and even unexpected challenges.

Why Robustness Matters and Who Should Care

Robustness refers to a system’s capacity to continue operating effectively under varying conditions, including the presence of errors, faults, or significant external perturbations. It’s the intrinsic strength that resists degradation, preventing failure rather than merely recovering from it. For example, a robust bridge is designed to endure extreme weather events without damage, whereas a resilient bridge might require significant repair after such an event.

The stakes are high. In an era marked by cyber threats, climate change impacts, supply chain disruptions, and economic volatility, the demand for truly robust systems spans every sector. From critical national infrastructure like power grids and communication networks to financial trading platforms, healthcare systems, and autonomous vehicles, a failure in robustness can have catastrophic consequences: severe economic losses, loss of life, or widespread societal disruption. Engineers, software architects, urban planners, policymakers, business leaders, and risk managers therefore all share a vested interest in understanding, implementing, and continually improving robustness in the systems they oversee.

The Enduring Pursuit of Stable Systems: Background and Context

The concept of robustness has deep roots across various disciplines. In classical engineering, it manifested as the choice of strong materials and the over-dimensioning of components to resist anticipated loads and stresses. Control theory matured over the 20th century, and from the late 1970s onward its “robust control” branch set out to design systems that maintain stability and performance despite uncertainties in their models or external disturbances.

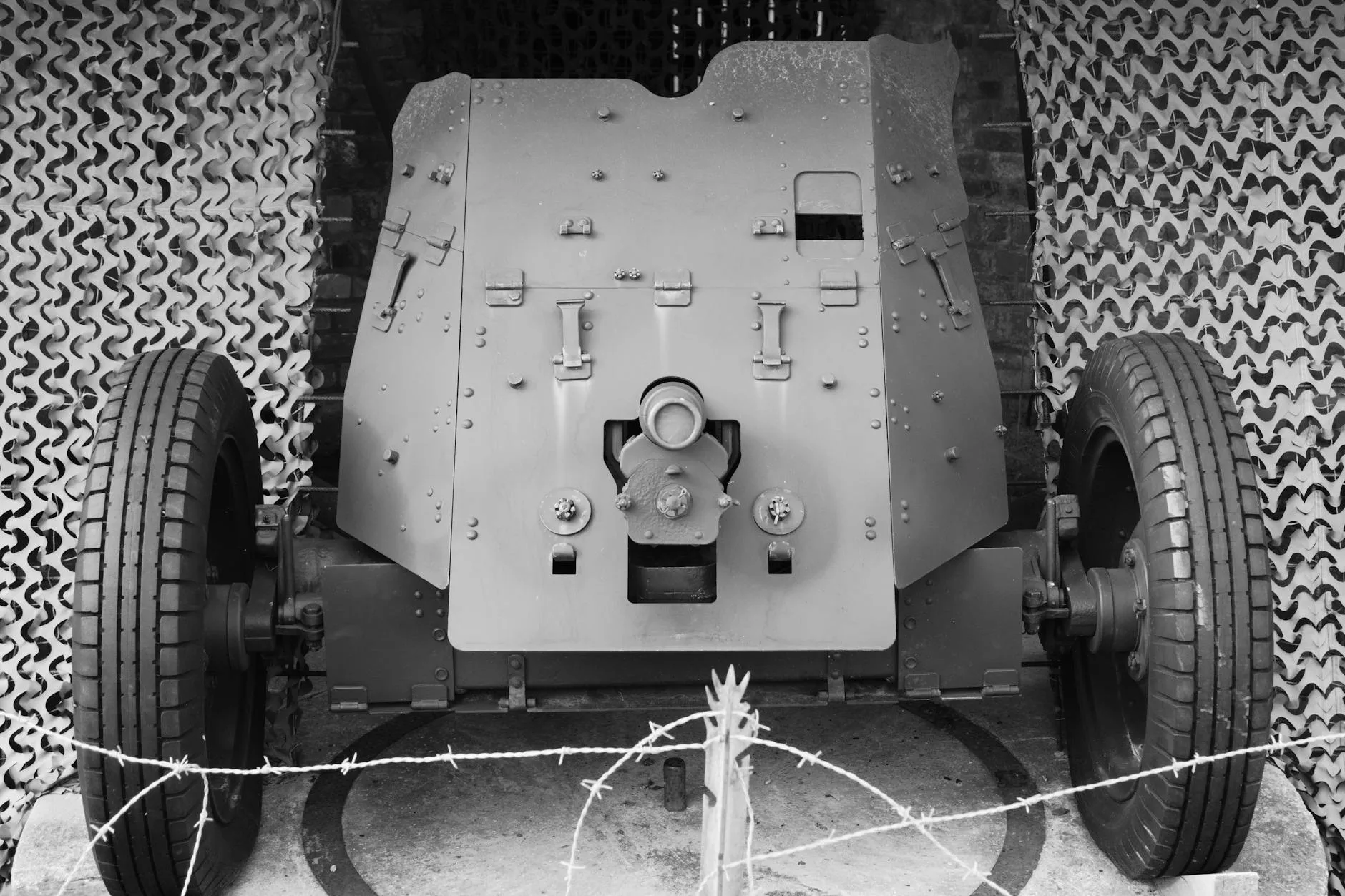

With the advent of computing, robustness evolved into fault tolerance and error handling—mechanisms ensuring that software and hardware could continue operating even when components failed or unexpected inputs were received. Military and aerospace applications, in particular, pioneered designs for extreme reliability and survivability. More recently, ecological science has highlighted the robustness of biodiverse ecosystems to external shocks, demonstrating how variety and redundancy contribute to stability.

Today, the convergence of complex adaptive systems, global interconnectedness, and accelerated rates of change makes the pursuit of robustness more critical than ever. The increasing reliance on digital technologies means that a single point of failure can propagate rapidly, underscoring the need for systemic strength.

Unpacking Robustness: Multidisciplinary Perspectives and Analysis

Understanding robustness requires a multidisciplinary approach, as its principles manifest differently yet coherently across various fields.

Engineering and Infrastructure Robustness

In traditional engineering, robust design emphasizes the ability of physical structures and mechanical systems to resist failure. This involves:

- Material Science: Using materials with high strength, ductility, and fatigue resistance.

- Redundancy: Incorporating backup components or parallel systems that can take over if a primary one fails (e.g., multiple power lines, redundant bridges).

- Factor of Safety: Designing components to be significantly stronger than theoretically required to handle unexpected loads or material imperfections.

- Fault-Tolerant Design: Architecting systems where the failure of one component does not lead to the failure of the entire system. For instance, according to a report by the National Institute of Standards and Technology (NIST) on Critical Infrastructure Resilience, ensuring robust infrastructure often involves a layered approach to protection and redundancy.
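The factor-of-safety and redundancy bullets above correspond to two textbook formulas, sketched here in Python. The function names and the sample numbers in the usage note are hypothetical, and the redundancy formula assumes that component failures are statistically independent, which shared causes such as a common power feed or a regional storm can violate.

```python
def factor_of_safety(capacity: float, expected_load: float) -> float:
    """Ratio of the load a component can bear to the load it is expected to bear."""
    if expected_load <= 0:
        raise ValueError("expected_load must be positive")
    return capacity / expected_load


def parallel_reliability(component_reliability: float, copies: int) -> float:
    """Probability that at least one of `copies` redundant components works.

    Assumes every copy has the same reliability and fails independently.
    """
    if not 0.0 <= component_reliability <= 1.0:
        raise ValueError("component_reliability must be between 0 and 1")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    # The system fails only if every copy fails.
    return 1.0 - (1.0 - component_reliability) ** copies
```

Under these assumptions, a cable rated for 300 kN carrying an expected 100 kN has a factor of safety of 3, and pairing two components that each work 90% of the time lifts the combined reliability to roughly 99%. Each additional copy buys less than the one before it, which is one reason redundancy is usually reserved for critical functions.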

Software and Cybersecurity Robustness

In software engineering and cybersecurity, robustness is crucial for system reliability and security. Key aspects include:

- Defensive Programming: Writing code that anticipates invalid inputs, errors, and exceptional conditions, handling them gracefully to prevent crashes or vulnerabilities.

- Fault-Tolerant Architectures: Designing distributed systems that can continue operating even if individual servers or network components fail. This includes techniques like replication, automatic failover, and self-healing mechanisms.

- Error Correction: Implementing algorithms that can detect and sometimes correct data corruption during transmission or storage.

- Input Validation: Rigorously checking all external inputs to prevent malicious attacks (e.g., SQL injection, buffer overflows) or unintended system behavior.

- Modularity and Isolation: Breaking down complex systems into independent modules so that a failure in one module doesn’t propagate throughout the entire system.
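As an illustration of defensive programming and input validation, the sketch below checks a username against an allow-list pattern and then passes it to the database as a bound parameter instead of splicing it into the SQL text. It uses Python's standard `sqlite3` module. The `users` table, the pattern, and the function names are hypothetical choices made for this example.

```python
import re
import sqlite3
from typing import Optional

# Hypothetical rule: 1 to 32 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{1,32}")


def validate_username(raw: object) -> str:
    """Reject anything that is not a well-formed username."""
    if not isinstance(raw, str):
        raise ValueError("username must be a string")
    if not USERNAME_PATTERN.fullmatch(raw):
        raise ValueError("username must be 1-32 letters, digits, or underscores")
    return raw


def find_user_id(conn: sqlite3.Connection, raw_username: object) -> Optional[int]:
    """Look up a user id, validating the input and binding it as a parameter."""
    username = validate_username(raw_username)
    # The "?" placeholder keeps the value out of the SQL text entirely.
    row = conn.execute(
        "SELECT id FROM users WHERE name = ?", (username,)
    ).fetchone()
    return row[0] if row else None


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database holding one user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT UNIQUE)")
    conn.execute("INSERT INTO users (name) VALUES (?)", ("alice",))
    return conn
```

The two defenses are deliberately layered: validation rejects malformed input early with a clear error, and the bound parameter means that even a value that slipped past validation would be treated as data and not as SQL.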

Economic and Financial Robustness

In finance, robustness refers to the ability of portfolios, markets, or entire economies to withstand shocks without collapsing. This is often achieved through:

- Diversification: Spreading investments across different asset classes, industries, and geographies to reduce vulnerability to a single market downturn.

- Stress Testing: Subjecting financial institutions and portfolios to hypothetical severe economic scenarios to assess their ability to withstand adverse conditions.

- Regulatory Buffers: Mandating capital reserves for banks to absorb losses, as outlined by frameworks like Basel Accords, aiming for systemic financial robustness.

- Decentralization: Reducing single points of failure in financial infrastructure, though this also introduces complexity.
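The effect of diversification can be illustrated with the standard formula for the volatility of an equally weighted portfolio. This is a simplified sketch: it assumes every asset has the same volatility and every pair of assets has the same correlation, and the function name is hypothetical.

```python
import math


def equal_weight_portfolio_volatility(
    asset_volatility: float, n_assets: int, correlation: float
) -> float:
    """Volatility of an equally weighted portfolio of identical assets.

    Assumes a common volatility and a common pairwise correlation in [0, 1].
    """
    if asset_volatility < 0:
        raise ValueError("asset_volatility must be non-negative")
    if n_assets < 1:
        raise ValueError("n_assets must be at least 1")
    if not 0.0 <= correlation <= 1.0:
        raise ValueError("correlation must be between 0 and 1 in this sketch")
    # variance = sigma^2 * (1/n + (1 - 1/n) * rho)
    variance = asset_volatility**2 * (
        1.0 / n_assets + (1.0 - 1.0 / n_assets) * correlation
    )
    return math.sqrt(variance)
```

With four uncorrelated assets at 20% volatility each, the portfolio volatility halves to about 10%. With a correlation of 1 it stays at 20% no matter how many assets are added, which is why diversification offers little protection when a shock hits all holdings at once.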

Distinguishing Robustness from Related Concepts

While often used interchangeably, it’s vital to differentiate robustness from similar concepts:

- Robustness vs. Resilience: Robustness is about preventing failure; resilience is about recovery after failure. A robust system doesn’t break; a resilient system bounces back quickly if it does. Both are desirable, but robustness is a first line of defense.

- Robustness vs. Anti-fragility: Nassim Nicholas Taleb’s concept of anti-fragility suggests systems that *benefit* from disorder and improve under stress. Robust systems resist damage; anti-fragile systems grow stronger. A simple example: a robust glass resists shattering; an anti-fragile bone gets stronger with micro-fractures.

According to general systems theory, a system’s robustness is often a function of its complexity, feedback loops, and the diversity of its components. Systems with multiple, independent pathways to achieve a goal tend to be more robust, provided those pathways do not share a common cause of failure.

The Tradeoffs and Limitations of Robustness

While the benefits of robustness are clear, achieving it often involves significant tradeoffs and limitations:

- Cost: Building highly robust systems can be expensive. Redundancy requires more hardware, stronger materials cost more, and extensive testing adds to development budgets. These costs must be weighed against the potential cost of failure.

- Complexity: Introducing redundancy, fault tolerance, and error handling mechanisms inherently increases the complexity of a system. More components, more lines of code, and more interdependencies can make systems harder to design, debug, maintain, and understand, potentially introducing new vulnerabilities.

- Efficiency: Redundant systems can be less efficient. For example, duplicating data across multiple servers consumes more storage and network bandwidth. Voting systems that require consensus among multiple components can introduce latency.

- Over-engineering: It’s possible to over-engineer for robustness, leading to systems that are unnecessarily complex, costly, and potentially rigid. A system that is robust against every conceivable (and inconceivable) threat might be impractical or unable to adapt to genuinely novel challenges.

- Adaptability vs. Robustness: Sometimes, excessive robustness can hinder a system’s adaptability. A highly rigid, robust structure might resist known stresses but be unable to reconfigure or evolve to meet entirely new environmental conditions or threat vectors. This is a point of ongoing debate in fields like software architecture and urban planning.

- Unknown Unknowns: Robustness primarily addresses *known* failure modes and anticipated variations. It may not protect against truly unprecedented “black swan” events, although a well-designed robust system can sometimes absorb unforeseen shocks better than a fragile one.

Understanding these tradeoffs is crucial for making informed design decisions, striking a balance between robustness, cost, performance, and adaptability.
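One simple way to weigh the cost of redundancy against the cost of failure is to compare expected total costs across design options. The sketch below does that. Every figure in it is hypothetical, the class and function names are invented for this example, and the failure probabilities assume that redundant copies fail independently.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class DesignOption:
    name: str
    build_cost: float           # up-front cost of this design
    failure_probability: float  # chance of a system failure over the period


def expected_total_cost(option: DesignOption, failure_cost: float) -> float:
    """Build cost plus the probability-weighted cost of a failure."""
    return option.build_cost + option.failure_probability * failure_cost


def cheapest_option(
    options: Sequence[DesignOption], failure_cost: float
) -> DesignOption:
    """Return the option with the lowest expected total cost."""
    return min(options, key=lambda o: expected_total_cost(o, failure_cost))


# Hypothetical numbers: each extra copy adds 120,000 to the build cost and
# multiplies the failure probability by 0.05 (independent failures assumed).
OPTIONS = [
    DesignOption("single", 100_000, 0.05),
    DesignOption("duplicated", 220_000, 0.05**2),
    DesignOption("triplicated", 340_000, 0.05**3),
]
```

With these numbers, a failure costing 5,000,000 makes the duplicated design the cheapest in expectation, while the triplicated design costs more than it saves. If a failure costs only 1,000,000, the single design wins. The same arithmetic shows both why redundancy pays off for critical functions and how over-engineering sets in.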

Practical Advice: Cultivating Robustness in Your Systems

Implementing robust design principles requires foresight, systematic analysis, and continuous vigilance. Here’s a practical guide:

A Checklist for Designing Robust Systems:

- Identify Critical Functions: What absolutely *must* remain operational? Prioritize these functions for robustness.

- Anticipate Failure Modes: Conduct a Failure Mode and Effects Analysis (FMEA) or a similar risk assessment. What can go wrong? How likely is it? What are the impacts? Consider hardware, software, human error, environmental factors, and malicious attacks.

- Implement Redundancy:

  - Component Redundancy: Duplicate critical hardware or software modules.

  - Data Redundancy: Use RAID, data replication, or distributed ledgers.

  - Process Redundancy: Have backup procedures or alternative workflows.

  - Network Redundancy: Multiple network paths and providers.

- Employ Fault Isolation and Containment: Design systems so that a failure in one component or subsystem does not propagate to others. Use bulkheads, firewalls, microservices, and strong API contracts.

- Build in Error Detection and Correction: Implement checksums and parity bits to detect corruption, error-correcting codes where data must be repaired automatically, and robust logging. Ensure systems can detect when something is amiss and, if possible, self-correct or fail gracefully.

- Validate Inputs Rigorously: Never trust external inputs. Validate data format, range, type, and source to prevent common vulnerabilities and errors.

- Simplify and Modularize: Reduce complexity wherever possible. Simpler systems have fewer points of failure and are easier to reason about, test, and maintain. Break systems into loosely coupled modules.

- Design for Graceful Degradation: If a full failure cannot be prevented, design the system to degrade gracefully, maintaining essential functions even if non-critical ones are impaired.

- Test Thoroughly (Stress & Adversarial Testing):

  - Stress Testing: Subject the system to extreme loads beyond typical operating conditions.

  - Chaos Engineering: Intentionally inject failures into a production system to find weaknesses before they cause outages, a practice popularized by Netflix.

  - Adversarial Testing: Simulate attacks to test cybersecurity robustness.

- Monitor and Alert Continuously: Implement comprehensive monitoring of system health, performance, and security. Set up alerts for anomalies to enable rapid response to developing issues.
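Several checklist items can work together in a few lines of code. The sketch below combines data redundancy, error detection, and graceful degradation: it reads from a list of replicas, skips any that are unreachable or whose CRC-32 checksum does not match, and returns `None` if no replica yields intact data, leaving the caller to fall back to a degraded mode. The function name and the reader callables are hypothetical. Note that CRC-32 catches accidental corruption and is not a defense against deliberate tampering.

```python
import zlib
from typing import Callable, Iterable, Optional


def read_verified(
    readers: Iterable[Callable[[], bytes]], expected_crc: int
) -> Optional[bytes]:
    """Return data from the first replica whose CRC-32 matches, else None.

    Each reader is a zero-argument callable that returns the replica's bytes
    or raises OSError if the replica is unavailable.
    """
    for read in readers:
        try:
            data = read()
        except OSError:
            continue  # replica unreachable: contain the fault and move on
        if zlib.crc32(data) == expected_crc:
            return data  # intact copy found
        # Checksum mismatch: treat the copy as corrupted and try the next one.
    return None  # no intact copy: the caller decides how to degrade
```

A caller might respond to `None` by serving cached data marked as stale or by disabling a non-critical feature, so that a storage problem does not take down the whole system.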

Important Cautions:

- Don’t Assume Robustness: Design for it, test for it, and verify it continually.

- Human Factors: A robust technical system can be undermined by human error, lack of training, or poor operational procedures.

- Continuous Process: Robustness is not a one-time project. It requires ongoing assessment, adaptation, and investment as threats evolve and systems change.

Key Takeaways for Enduring Systems

- Robustness is Foundational: It’s about preventing failure and maintaining functionality despite disturbances, serving as the first line of defense for any critical system.

- Distinct from Resilience and Anti-fragility: While related, robustness focuses on resistance to degradation rather than recovery or benefiting from chaos.

- Multidisciplinary Application: Essential in engineering, software, finance, and beyond, adapting its principles to each domain’s unique challenges.

- Requires Intentional Design: Achieved through redundancy, fault tolerance, diligent error handling, and rigorous testing.

- Involves Tradeoffs: Cost, complexity, and efficiency must be carefully balanced against the desired level of robustness and risk tolerance.

- A Continuous Journey: Building and maintaining robust systems is an ongoing process of analysis, implementation, and adaptation.

References and Further Reading

- National Institute of Standards and Technology (NIST) Special Publication 800-160: Systems Security Engineering – Considerations for a Multidisciplinary Approach in the Engineering of Trustworthy Secure Systems – Provides comprehensive guidance on engineering secure and resilient (including robust) systems.

- IEEE Transactions on Automatic Control – Papers on Robust Control Theory – A peer-reviewed journal featuring academic research on designing control systems that perform reliably despite model uncertainties and disturbances.

- O’Reilly Media: Building Resilient Microservices – Chapter on Fault Tolerance and Robustness – A practical guide to designing robust software architectures, specifically in the context of distributed systems.

- Bank for International Settlements (BIS) – Basel Accords Overview – Official documentation on international banking regulations designed to enhance the robustness and stability of the global financial system.

- The Black Swan: The Impact of the Highly Improbable by Nassim Nicholas Taleb – Explores fragility and robustness in the face of rare, unpredictable events. Taleb develops the concept of anti-fragility in his later book, Antifragile: Things That Gain from Disorder.

- O’Reilly Media: Chaos Engineering: Building Confidence in System Behavior through Experiments – Details methodologies for proactively testing system robustness by introducing controlled failures.